Hello All

Here is a Testimonial (and HowTo) about installing a new NS8 VM based on the latest Debian 12.5 on a fairly low-powered Proxmox hypervisor.

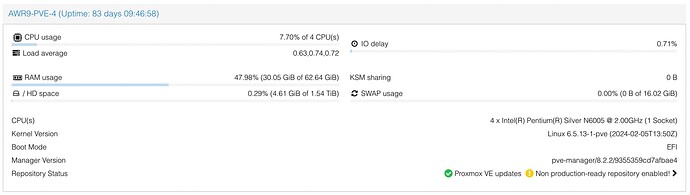

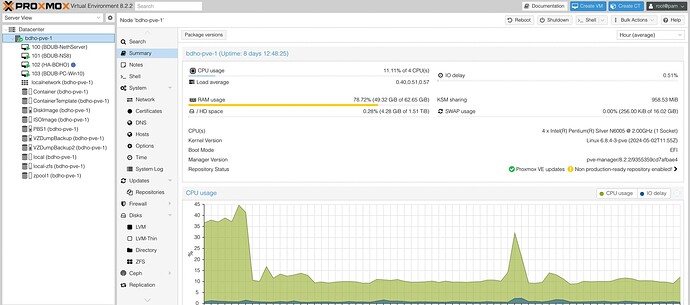

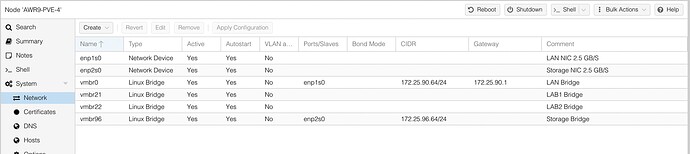

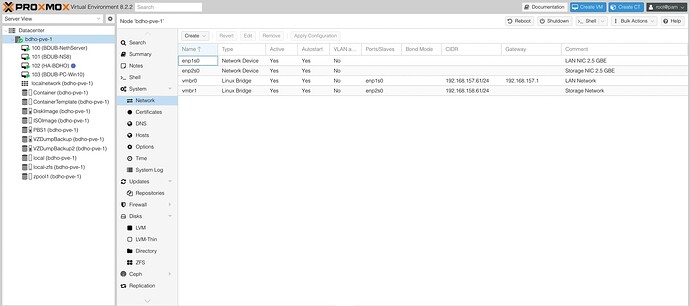

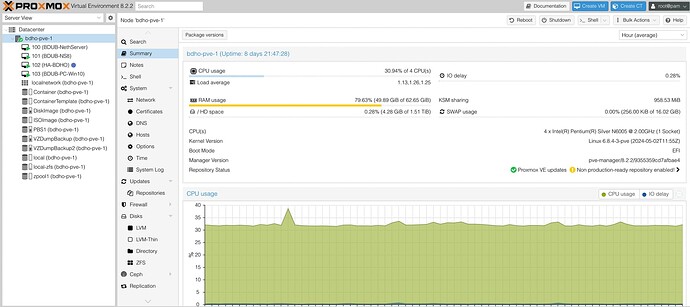

The basis for Proxmox is a small Odroid H3+ based box (Type7). Technical data / specs:

CPU: Quad Core Intel N6005 CPU 2 GHz

RAM: 64 GB

Storage: 2 TB Samsung NVMe with cooler

2 NICs with 2.5 Gbit/s

Additional storage is available via a dedicated 2.5 Gbit/s storage LAN, but is NOT used in this use case.

Backups go via NFS to an OpenMediaVault NAS, and to PBS (Proxmox Backup Server).

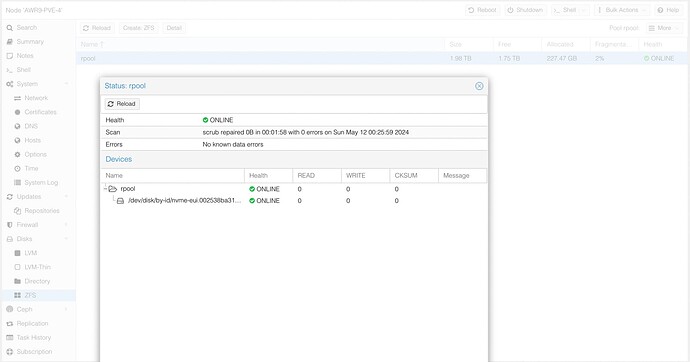

All local storage is configured as ZFS, with the ZFS RAM usage (ARC) limited.

The system boots from ZFS.
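On Proxmox, the usual way to cap ZFS RAM usage is the `zfs_arc_max` module parameter (a value in bytes) in /etc/modprobe.d/zfs.conf, and because this host boots from ZFS, the initramfs has to be rebuilt afterwards. The sketch below assumes an 8 GiB limit chosen purely as an example (the actual value used on this box isn't stated), and the function names are made up for illustration. Note that it overwrites /etc/modprobe.d/zfs.conf.

```shell
#!/usr/bin/env bash
# Sketch: cap the ZFS ARC (ZFS's RAM cache) on a Proxmox host.
# The function names and the 8 GiB example value are hypothetical.

# Print the modprobe option line for a given ARC limit in GiB.
arc_max_line() {
  local gib=$1
  # zfs_arc_max is given in bytes: GiB * 1024^3
  echo "options zfs zfs_arc_max=$(( gib * 1024 * 1024 * 1024 ))"
}

# Persist the limit (overwrites /etc/modprobe.d/zfs.conf) and rebuild
# the initramfs, which is needed when the root file system is on ZFS.
apply_arc_max() {
  local gib=$1
  arc_max_line "$gib" > /etc/modprobe.d/zfs.conf
  update-initramfs -u -k all
}
```

Run `apply_arc_max 8` as root and reboot; the active limit can then be read back from /sys/module/zfs/parameters/zfs_arc_max.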

This whole system draws a maximum of 60 W!

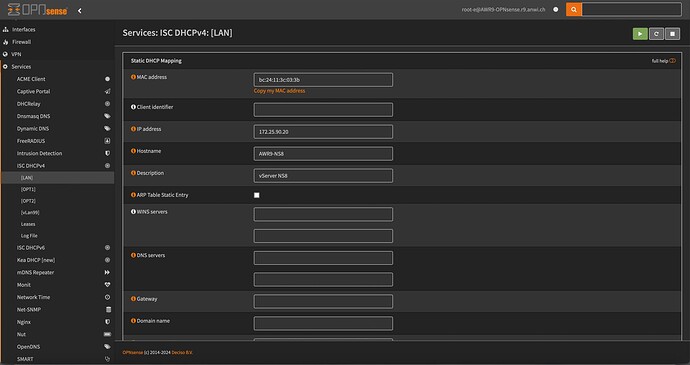

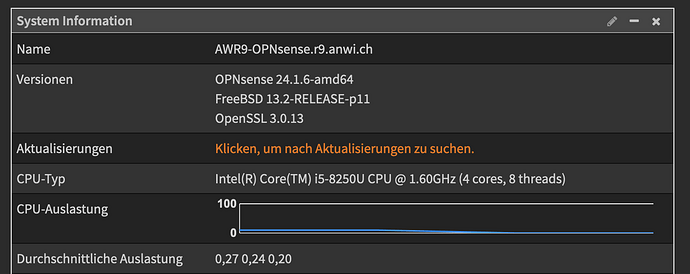

Firewall / DNS / DHCP is done at my home site with OPNsense.

I created a DHCP reservation for my NS8 VM, even though it is configured as static on the VM itself.

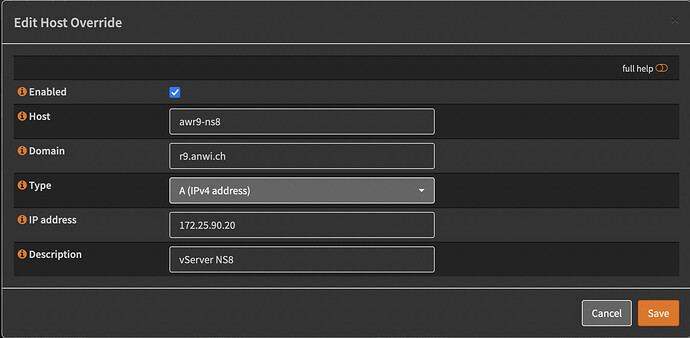

I also created a DNS entry for the FQDN before the actual installation.

NS8 will use 172.25.90.21/24 in this use case.

DHCP reservation

DNS (Unbound) entry:

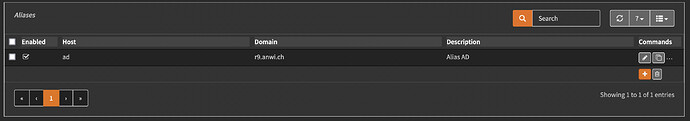

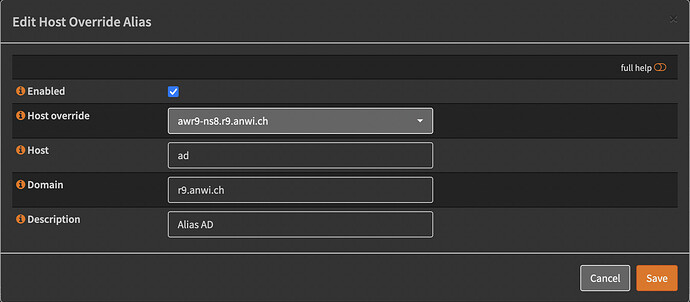

An Alias (CNAME) for the AD…

I’m including screenshots from my specific OPNsense setup, but they might also help anyone using something else (NethSecurity, pfSense)…

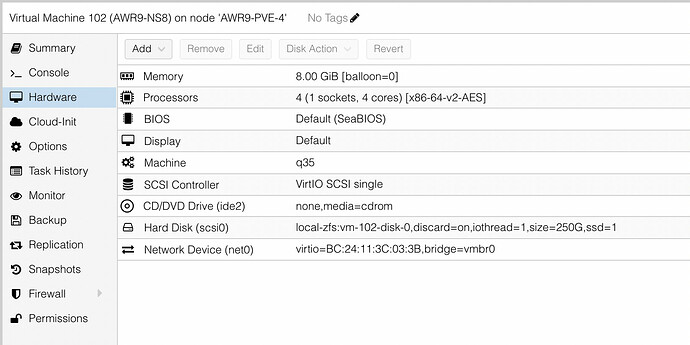

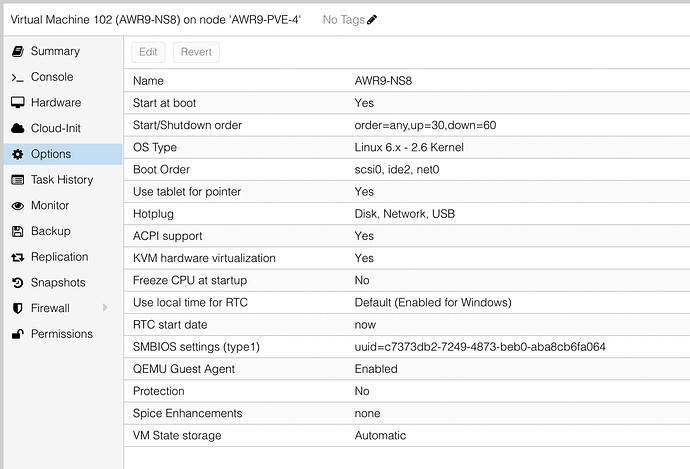

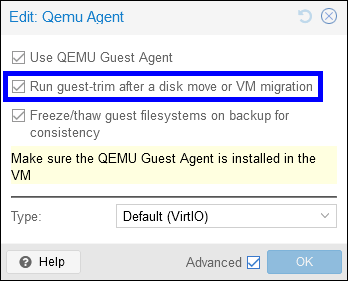

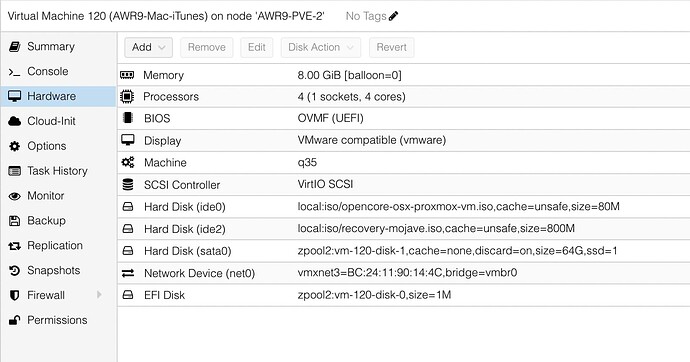

The VM was created on Proxmox with the following settings:

The install image is the latest Debian 12.5 ISO (Netinstall).

I use Debian’s graphical installer, here with local language settings (German / Swiss German).

I use Btrfs as the file system, all on a single VM disk (200 GB, can be enlarged later if needed).

In the software selection, I choose a minimal system: I deselect both pre-selected desktop (GUI) options and select only SSH server.

After installing Debian 12, I remove the ISO image and update the system with

apt update && apt upgrade

and install what I need:

apt install mc nano htop screen snmp snmpd curl

Set SSH to allow root login

nano /etc/ssh/sshd_config

and set the line

PermitRootLogin yes

save & close

and run

systemctl enable ssh

systemctl restart ssh

systemctl status ssh
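The sshd change can also be scripted so it is safe to re-run. A sketch, assuming GNU sed (as shipped with Debian): it replaces an existing or commented-out PermitRootLogin line, or appends one, and only restarts ssh if `sshd -t` accepts the file. One caveat: Debian 12’s sshd_config includes /etc/ssh/sshd_config.d/*.conf near the top, and sshd uses the first value it finds, so a setting in there would win. The function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: set "PermitRootLogin yes" idempotently in an sshd_config-style file.
# Function names are hypothetical; requires GNU sed (for -i and the I flag).

set_permit_root_login() {
  local file=${1:-/etc/ssh/sshd_config}
  # Matches "PermitRootLogin ...", "#PermitRootLogin ..." etc. (case-insensitive,
  # as sshd keywords are)
  local re='^[[:space:]]*#?[[:space:]]*PermitRootLogin([[:space:]].*)?$'
  if grep -qiE "$re" "$file"; then
    # Replace every existing (or commented-out) PermitRootLogin line
    sed -i -E "s/$re/PermitRootLogin yes/I" "$file"
  else
    # No such line yet: append the directive
    echo 'PermitRootLogin yes' >> "$file"
  fi
}

# Apply to the real config, validate it, and restart only if it is valid.
apply_ssh_root_login() {
  set_permit_root_login /etc/ssh/sshd_config
  sshd -t && systemctl restart ssh
}
```

Running `apply_ssh_root_login` as root covers the edit and the restart; `systemctl status ssh` still shows the result.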

I then also set up SNMP:

mv /etc/snmp/snmpd.conf /etc/snmp/snmpd.conf_orig

nano /etc/snmp/snmpd.conf

Add as needed:

rocommunity public

syscontact Admin <netmaster@anwi.ch>

syslocation ANWI Consulting R9

save & exit and run:

systemctl enable snmpd

systemctl restart snmpd

systemctl status snmpd
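The SNMP steps can be wrapped the same way. This sketch keeps the shipped file as snmpd.conf_orig (only on the first run, so re-running doesn’t overwrite the backup) and writes the three directives from above; the function name is hypothetical, and the community and contact values are simply the ones from this post.

```shell
#!/usr/bin/env bash
# Sketch of the SNMP setup above; write_snmpd_conf is a hypothetical name.

write_snmpd_conf() {
  local conf=${1:-/etc/snmp/snmpd.conf}
  # Back up the shipped config only once, so a re-run keeps the original
  if [ -e "$conf" ] && [ ! -e "${conf}_orig" ]; then
    mv "$conf" "${conf}_orig"
  fi
  # Quoted heredoc: the text is written literally, no variable expansion
  cat > "$conf" <<'EOF'
rocommunity public
syscontact Admin <netmaster@anwi.ch>
syslocation ANWI Consulting R9
EOF
}
```

After `write_snmpd_conf && systemctl restart snmpd`, a query from another machine such as `snmpwalk -v2c -c public 172.25.90.21 1.3.6.1.2.1.1` should return the system group, including the contact and location set above.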

After a reboot, Debian 12 is ready for NS8…

Due to a bug in the installation (now officially fixed and distributed, so the “normal” method can be used as per the instructions), I used a special command on the CLI (via SSH).

This was part of testing, in coordination with @Tbaile / @davidep, many thanks!

curl https://raw.githubusercontent.com/NethServer/ns8-core/main/core/install.sh > install.sh

bash install.sh ghcr.io/nethserver/core:latest ghcr.io/nethserver/traefik:setting-backoff-on-init

Not a single glitch!

After logging in to the cluster, I set up what I needed (this is NOT a migration from NS7!).

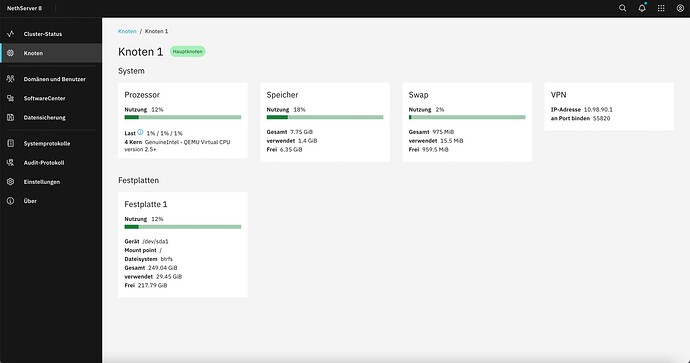

It looks like this now:

I’m using AD and FileServer, and intend to use Mail, Nextcloud and maybe others…

There is still a lot to do: additional modules / apps I’d like to install and test, and setting up NS8’s own backup. The main backup will be PBS.

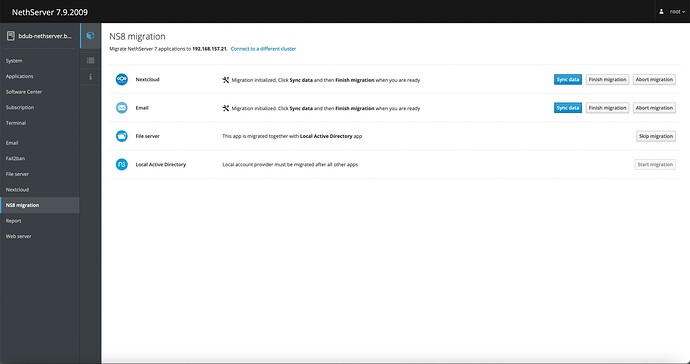

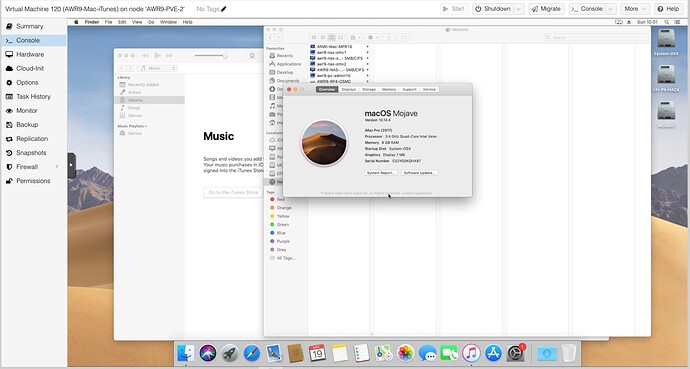

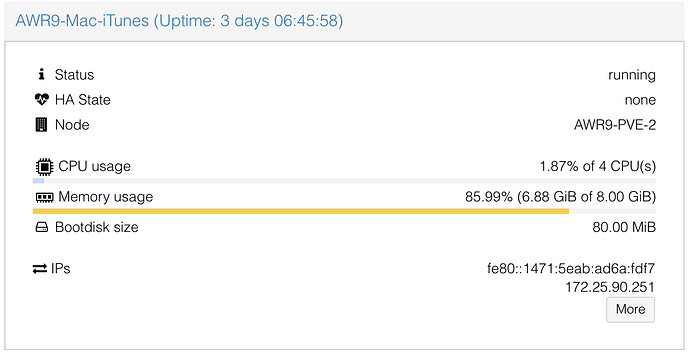

I would also like to add that, at the same time, I prepared a migration for a client using the same hardware at his SoHo: an Odroid H3+ with specs identical to mine above.

This case is a migration from NS7, which is also running on this small hardware. There is even a Win10 workstation running on it for remote access / work.

The actual migration is planned to be finalized this weekend. The current status:

Proxmox and NS8 are both multitasking-capable, but so am I: I started 4 additional NS7-to-NS8 migrations last night!

I hope to motivate other users with this small Testimonial / HowTo.

Especially those interested in using Debian!

But also those interested in using one of the other supported distributions (Rocky / Alma) on such hardware for Home / Lab / SoHo use.

My 2 cents

Andy