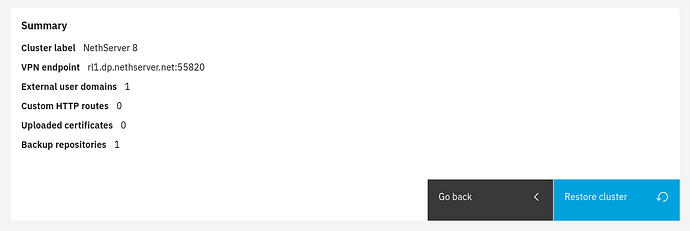

Since the problem with backup has apparently sorted itself out (see Backup failing in NS8), the obvious next step is to test the ability to restore. So I installed Debian 12 in a fresh VM with adequate disk space, installed NS8 on that, logged in to the cluster-admin page (who, oh who thought that asking for username and password on separate pages was a good idea?), and chose the option to restore the cluster.

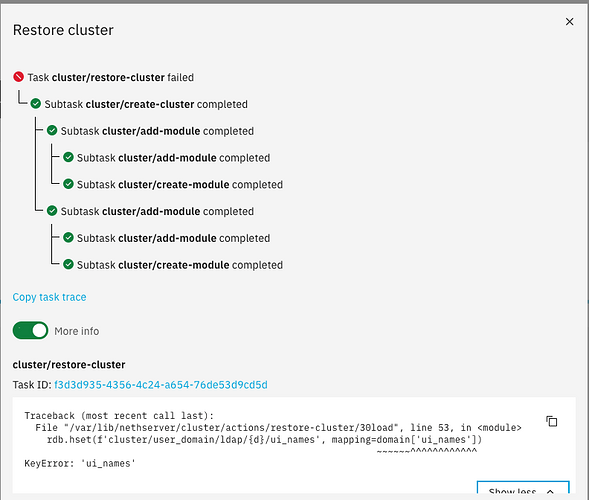

Uploaded the small backup file I’d downloaded from the first system, entered the password, and… almost instant failure. I got this:

…the result of “Copy task trace” is:

```
{"context":{"action":"restore-cluster","data":null,"extra":{"eventId":"6715dd05-54a6-40eb-b500-c86d2f29baa8","isNotificationHidden":true,"isProgressNotified":true,"title":"Restore cluster","toastTimeout":0},"id":"f3d3d935-4356-4c24-a654-76de53d9cd5d","parent":"","queue":"cluster/tasks","timestamp":"2024-02-19T12:47:42.438784244Z","user":"admin"},"status":"aborted","progress":89,"subTasks":[{"context":{"action":"create-cluster","data":{"endpoint":"ns8.familybrown.org:55820","network":"10.5.4.0/24"},"extra":{},"id":"cb46c26c-a593-4598-86f2-056a8403cf82","parent":"f3d3d935-4356-4c24-a654-76de53d9cd5d"},"status":"completed","progress":100,"subTasks":[{"context":{"action":"add-module","data":{"image":"ldapproxy","node":1},"extra":{},"id":"a19050b9-f675-40c3-aaba-89ed63d136fd","parent":"cb46c26c-a593-4598-86f2-056a8403cf82"},"status":"completed","progress":100,"subTasks":[{"context":{"action":"add-module","data":{"environment":{"IMAGE_DIGEST":"sha256:99802a5f2c061d21d679348c68f00599e85fedb2e4480498511c57fffd0fdaba","IMAGE_ID":"22e5405803dae42e6e918f3693bbf201b17eaa659aad4c0d235ddac2022a1a7d","IMAGE_REOPODIGEST":"ghcr.io/nethserver/ldapproxy@sha256:99802a5f2c061d21d679348c68f00599e85fedb2e4480498511c57fffd0fdaba","IMAGE_URL":"ghcr.io/nethserver/ldapproxy:1.0.0","MODULE_ID":"ldapproxy1","MODULE_UUID":"881c287e-e8be-4cfe-8d55-445c10376616","NODE_ID":"1","TCP_PORT":"20001","TCP_PORTS":"20001,20002,20003,20004,20005,20006,20007,20008","TCP_PORTS_RANGE":"20001-20008"},"is_rootfull":false,"module_id":"ldapproxy1"},"extra":{},"id":"aeebe782-6ffe-495f-814b-3ce77de2cd3c","parent":"a19050b9-f675-40c3-aaba-89ed63d136fd"},"status":"completed","progress":100,"subTasks":[],"result":{"error":"<7>useradd -m -k /etc/nethserver/skel -s /bin/bash ldapproxy1\n<7>extract-image ghcr.io/nethserver/ldapproxy:1.0.0\nExtracting container filesystem imageroot to /home/ldapproxy1/.config\nTotal bytes read: 30720 (30KiB, 3.2MiB/s)\nimageroot/actions/\nimageroot/actions/create-module/\nimageroot/actions/create-module/50start_service\nimageroot/bin/\nimageroot/bin/allocate-ports\nimageroot/bin/expand-template\nimageroot/bin/update-conf\nimageroot/events/\nimageroot/events/ldap-provider-changed/\nimageroot/events/ldap-provider-changed/10handler\nimageroot/events/service-ldap-changed/\nimageroot/events/service-ldap-changed/10handler\nimageroot/systemd/\nimageroot/systemd/user/\nimageroot/systemd/user/ldapproxy.service\nimageroot/templates/\nimageroot/templates/nginx.conf.j2\nimageroot/update-module.d/\nimageroot/update-module.d/50restart\nchanged ownership of './systemd/user/ldapproxy.service' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './update-module.d/50restart' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './update-module.d' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './actions/create-module/50start_service' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './actions/create-module' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './actions' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './templates/nginx.conf.j2' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './templates' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './.imageroot.lst' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './bin/update-conf' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './bin/allocate-ports' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './bin/expand-template' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './bin' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './state/environment' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './state/agent.env' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './events/ldap-provider-changed/10handler' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './events/ldap-provider-changed' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './events/service-ldap-changed/10handler' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './events/service-ldap-changed' from root:root to ldapproxy1:ldapproxy1\nchanged ownership of './events' from root:root to ldapproxy1:ldapproxy1\n0dd744424444421eb4061cb08fc8a6088dba02ecd8cd22fc7b25ad78725f6614\n<7>systemctl try-restart promtail.service\n<7>loginctl enable-linger ldapproxy1\n","exit_code":0,"file":"task/node/1/aeebe782-6ffe-495f-814b-3ce77de2cd3c","output":{"redis_sha256":"b603916c85fce7853be445bf78ce60ae25036ad6e817e65f81c859f086cfcf37"}}},{"context":{"action":"create-module","data":{"images":["docker.io/library/nginx:1.25.3-alpine"]},"extra":{},"id":"c4caaf13-8579-40bb-8587-cd72ebceb6e4","parent":"a19050b9-f675-40c3-aaba-89ed63d136fd"},"status":"completed","progress":100,"subTasks":[],"result":{"error":"Add to module/ldapproxy1 environment NGINX_IMAGE=docker.io/library/nginx:1.25.3-alpine\n<7>podman-pull-missing docker.io/library/nginx:1.25.3-alpine\nTrying to pull docker.io/library/nginx:1.25.3-alpine...\nGetting image source signatures\nCopying blob sha256:4ea31a8fb8756a19d3e7710cb8be2b66aea4e678cc836b88c8c8daa4b564d55b\nCopying blob sha256:9e03973bc8036b5eecce7b2d9996cc16108ad4fe366bafd5e0b972c57339404e\nCopying blob sha256:d18780149b8172369a5d5194091eb33adc7fcbfd32056d7eb3cedde75725bf82\nCopying blob sha256:619be1103602d98e1963557998c954c892b3872986c27365e9f651f5bc27cab8\nCopying blob sha256:a3a550dcd38681a0c4c41afd0f4977260b70ceec241359a0b35f443e865bdaff\nCopying blob sha256:6c866301bd2c1af4fb61766a45a078102eedab8c7b910c0796eab6ea99b14577\nCopying blob sha256:e57ebb3e206791646f52f35d99488b64a61ab4a1d39b7be52b15d82bd3b12988\nCopying blob sha256:efb7d60b16cfcd8e0daecf965b6f5575423bf03ac868cbd7def5803501b311b1\nCopying config sha256:2b70e4aaac6b5370bf3a556f5e13156692351696dd5d7c5530d117aa21772748\nWriting manifest to image destination\nStoring signatures\n2b70e4aaac6b5370bf3a556f5e13156692351696dd5d7c5530d117aa21772748\nCreated symlink /home/ldapproxy1/.config/systemd/user/default.target.wants/ldapproxy.service → /home/ldapproxy1/.config/systemd/user/ldapproxy.service.\n","exit_code":0,"file":"task/module/ldapproxy1/c4caaf13-8579-40bb-8587-cd72ebceb6e4","output":""}}],"result":{"error":"<7>podman-pull-missing ghcr.io/nethserver/ldapproxy:1.0.0\nTrying to pull ghcr.io/nethserver/ldapproxy:1.0.0...\nGetting image source signatures\nCopying blob sha256:bfaebb3ad7186ed4748057d09b472ad2f03aec10834380810357e786f74b3bef\nCopying config sha256:22e5405803dae42e6e918f3693bbf201b17eaa659aad4c0d235ddac2022a1a7d\nWriting manifest to image destination\nStoring signatures\n22e5405803dae42e6e918f3693bbf201b17eaa659aad4c0d235ddac2022a1a7d\n<7>extract-ui ghcr.io/nethserver/ldapproxy:1.0.0\nExtracting container filesystem ui to /var/lib/nethserver/cluster/ui/apps/ldapproxy1\nui/index.html\n409245cb8a8b6f62d2678b58e6079c07d48b48c14c0cb8ea5c82a6e83d1f464b\n","exit_code":0,"file":"task/cluster/a19050b9-f675-40c3-aaba-89ed63d136fd","output":{"image_name":"ldapproxy","image_url":"ghcr.io/nethserver/ldapproxy:1.0.0","module_id":"ldapproxy1"}}},{"context":{"action":"add-module","data":{"image":"loki","node":1},"extra":{},"id":"e417f791-d36c-447e-9a31-e34aa58968d5","parent":"cb46c26c-a593-4598-86f2-056a8403cf82"},"status":"completed","progress":100,"subTasks":[{"context":{"action":"add-module","data":{"environment":{"IMAGE_DIGEST":"sha256:25de762e9f2d5c0a28d89c7d97e7bc02269bc743da08e0eeda0cf20272d547be","IMAGE_ID":"9ccb9414fb0a931b747412513450d648d7df92ed8fff989f4598d917cc17173c","IMAGE_REOPODIGEST":"ghcr.io/nethserver/loki@sha256:25de762e9f2d5c0a28d89c7d97e7bc02269bc743da08e0eeda0cf20272d547be","IMAGE_URL":"ghcr.io/nethserver/loki:1.0.0","MODULE_ID":"loki1","MODULE_UUID":"9f9800e2-8e4c-4a01-9033-689be98ee4f3","NODE_ID":"1","TCP_PORT":"20009","TCP_PORTS":"20009"},"is_rootfull":false,"module_id":"loki1"},"extra":{},"id":"2fcf09d5-aa40-4893-9a1c-95618bed9c3f","parent":"e417f791-d36c-447e-9a31-e34aa58968d5"},"status":"completed","progress":100,"subTasks":[],"result":{"error":"<7>useradd -m -k /etc/nethserver/skel -s /bin/bash loki1\n<7>extract-image ghcr.io/nethserver/loki:1.0.0\nExtracting container filesystem imageroot to /home/loki1/.config\nTotal bytes read: 30720 (30KiB, 2.2MiB/s)\nimageroot/actions/\nimageroot/actions/create-module/\nimageroot/actions/create-module/10env\nimageroot/actions/create-module/20systemd\nimageroot/actions/restore-module/\nimageroot/actions/restore-module/06copyenv\nimageroot/etc/\nimageroot/etc/state-include.conf\nimageroot/scripts/\nimageroot/scripts/expandconfig-traefik\nimageroot/systemd/\nimageroot/systemd/user/\nimageroot/systemd/user/loki-server.service\nimageroot/systemd/user/loki.service\nimageroot/systemd/user/traefik.service\nimageroot/update-module.d/\nimageroot/update-module.d/20restart\nchanged ownership of './systemd/user/loki-server.service' from root:root to loki1:loki1\nchanged ownership of './systemd/user/traefik.service' from root:root to loki1:loki1\nchanged ownership of './systemd/user/loki.service' from root:root to loki1:loki1\nchanged ownership of './update-module.d/20restart' from root:root to loki1:loki1\nchanged ownership of './update-module.d' from root:root to loki1:loki1\nchanged ownership of './actions/restore-module/06copyenv' from root:root to loki1:loki1\nchanged ownership of './actions/restore-module' from root:root to loki1:loki1\nchanged ownership of './actions/create-module/10env' from root:root to loki1:loki1\nchanged ownership of './actions/create-module/20systemd' from root:root to loki1:loki1\nchanged ownership of './actions/create-module' from root:root to loki1:loki1\nchanged ownership of './actions' from root:root to loki1:loki1\nchanged ownership of './.imageroot.lst' from root:root to loki1:loki1\nchanged ownership of './etc/state-include.conf' from root:root to loki1:loki1\nchanged ownership of './etc' from root:root to loki1:loki1\nchanged ownership of './state/environment' from root:root to loki1:loki1\nchanged ownership of './state/agent.env' from root:root to loki1:loki1\nchanged ownership of './scripts/expandconfig-traefik' from root:root to loki1:loki1\nchanged ownership of './scripts' from root:root to loki1:loki1\n1adb375fa62e8154d521963f81be0c6466aeb66866c00911ccef264f238594d8\n<7>systemctl try-restart promtail.service\n<7>loginctl enable-linger loki1\n","exit_code":0,"file":"task/node/1/2fcf09d5-aa40-4893-9a1c-95618bed9c3f","output":{"redis_sha256":"b878f087f6b87100301b9acd7bb92844977aff4b9ee87b7349561a711cd7b4ec"}}},{"context":{"action":"create-module","data":{"images":["docker.io/traefik:v2.4","docker.io/grafana/loki:2.2.1"]},"extra":{},"id":"bc643eab-3ea7-4253-9c25-affccff6fb04","parent":"e417f791-d36c-447e-9a31-e34aa58968d5"},"status":"completed","progress":100,"subTasks":[],"result":{"error":"Add to module/loki1 environment TRAEFIK_IMAGE=docker.io/traefik:v2.4\nAdd to module/loki1 environment LOKI_IMAGE=docker.io/grafana/loki:2.2.1\n<7>podman-pull-missing docker.io/traefik:v2.4 docker.io/grafana/loki:2.2.1\nTrying to pull docker.io/library/traefik:v2.4...\nGetting image source signatures\nCopying blob sha256:fe4701c53ae539044a129428575f42a0e0aa5e923d04b97466915bf45f5df0e3\nCopying blob sha256:ddad3d7c1e96adf9153f8921a7c9790f880a390163df453be1566e9ef0d546e0\nCopying blob sha256:5f6722e60c2f6c55424dadebe886f88ba1b903df075b00048427439abb91b85a\nCopying blob sha256:3abdcd3bb40ca29c232ad12d1f2cba6efcb28e8d8ab7e5787ad2771b4e3862b0\nCopying config sha256:de1a7c9d5d63d8ab27b26f16474a74e78d252007d3a67ff08dcbad418eb335ae\nWriting manifest to image destination\nStoring signatures\nde1a7c9d5d63d8ab27b26f16474a74e78d252007d3a67ff08dcbad418eb335ae\nTrying to pull docker.io/grafana/loki:2.2.1...\nGetting image source signatures\nCopying blob sha256:753793ea21f66410ed4b05c828216728b6521b13a1de5939203258636f11eed5\nCopying blob sha256:e4f5d1b1114583aca9dafec1f0cc4f8a21ae15f5ea04b0ac236c148e09aa5f54\nCopying blob sha256:31603596830fc7e56753139f9c2c6bd3759e48a850659506ebfb885d1cf3aef5\nCopying blob sha256:858024693c419c0dd99a6d6ea88967d0f74cb3bdace948a115a6b7584019f644\nCopying blob sha256:8818f75b6e26a7de66dd46182944abe3a695bcafe01f1bf4cffcab489b34f960\nCopying blob sha256:e94f8da5a2cba4300c6e74d1eef04f2cc7f39cb1d08303f803f119fe3e06ca0f\nCopying blob sha256:586f67fcd52ef2996deebfe0c50f19ca0b2c59660e6efa9c76e580580dd8a5f9\nCopying config sha256:727c39682956d63917122ed8b23b916821f7c850bed426ace01fabfe81530790\nWriting manifest to image destination\nStoring signatures\n727c39682956d63917122ed8b23b916821f7c850bed426ace01fabfe81530790\nCreated symlink /home/loki1/.config/systemd/user/default.target.wants/loki.service → /home/loki1/.config/systemd/user/loki.service.\n","exit_code":0,"file":"task/module/loki1/bc643eab-3ea7-4253-9c25-affccff6fb04","output":""}}],"result":{"error":"<7>podman-pull-missing ghcr.io/nethserver/loki:1.0.0\nTrying to pull ghcr.io/nethserver/loki:1.0.0...\nGetting image source signatures\nCopying blob sha256:5d9f5409008a2b58d19f28940d31322902a17d140bd36d2996113a4cbd61a1d2\nCopying config sha256:9ccb9414fb0a931b747412513450d648d7df92ed8fff989f4598d917cc17173c\nWriting manifest to image destination\nStoring signatures\n9ccb9414fb0a931b747412513450d648d7df92ed8fff989f4598d917cc17173c\n<7>extract-ui ghcr.io/nethserver/loki:1.0.0\nExtracting container filesystem ui to /var/lib/nethserver/cluster/ui/apps/loki1\nui/index.html\nf4caed718f351573b4c5fa3a01ba0f2225b80e53d834408ba645cd030352a58f\n","exit_code":0,"file":"task/cluster/e417f791-d36c-447e-9a31-e34aa58968d5","output":{"image_name":"loki","image_url":"ghcr.io/nethserver/loki:1.0.0","module_id":"loki1"}}}],"result":{"error":"<7>sed -i '/- 127.0.0.1$/a\\ \\ \\ \\ \\ \\ \\ \\ - 10.5.4.0/24' /home/traefik1/.config/state/configs/_api.yml\n<7>sed -i -e '/cluster-localnode$/c\\10.5.4.1 cluster-localnode' /etc/hosts\n<7>systemctl stop wg-quick@wg0.service\n<7>systemctl start wg-quick@wg0.service\n<7>systemctl enable --now phonehome.timer\nCreated symlink /etc/systemd/system/timers.target.wants/phonehome.timer → /etc/systemd/system/phonehome.timer.\nCreated symlink /etc/systemd/system/multi-user.target.wants/wg-quick@wg0.service → /lib/systemd/system/wg-quick@.service.\nsuccess\nsuccess\nsuccess\nsuccess\nsuccess\n'./journal' -> '/var/log/journal'\nCreated symlink /etc/systemd/system/default.target.wants/promtail.service → /etc/systemd/system/promtail.service.\n","exit_code":0,"file":"task/cluster/cb46c26c-a593-4598-86f2-056a8403cf82","output":{"ip_address":"10.5.4.1"}}}],"validated":true,"result":{"error":"Traceback (most recent call last):\n File \"/var/lib/nethserver/cluster/actions/restore-cluster/30load\", line 53, in <module>\n rdb.hset(f'cluster/user_domain/ldap/{d}/ui_names', mapping=domain['ui_names'])\n ~~~~~~^^^^^^^^^^^^\nKeyError: 'ui_names'\n","exit_code":1,"file":"task/cluster/f3d3d935-4356-4c24-a654-76de53d9cd5d","output":""}}
```
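The trace is a tree: each task has a `context.action`, a `result` holding `exit_code` and an `error` field, and a list of nested `subTasks`. Note that `error` carries each step's log output even when the step succeeded (`exit_code` 0), so the exit code is the more reliable signal. Here is a minimal Python sketch for pulling out only the steps that actually failed; `find_failures` and the heavily trimmed `SAMPLE` are my own illustrative names, not anything provided by NS8.

```python
import json
from typing import Iterator


def find_failures(task: dict, depth: int = 0) -> Iterator[tuple[int, str, int, str]]:
    """Recursively yield (depth, action, exit_code, error) for every task in a
    copied task trace whose result reports a non-zero exit code."""
    result = task.get("result") or {}
    exit_code = result.get("exit_code")
    if exit_code not in (None, 0):
        yield depth, task["context"]["action"], exit_code, result.get("error", "")
    for sub in task.get("subTasks", []):
        yield from find_failures(sub, depth + 1)


# Heavily trimmed stand-in for the trace above: only the fields the walker reads.
SAMPLE = """{"context": {"action": "restore-cluster"}, "status": "aborted",
  "subTasks": [{"context": {"action": "create-cluster"}, "status": "completed",
                "subTasks": [], "result": {"error": "success\\n", "exit_code": 0}}],
  "result": {"error": "KeyError: 'ui_names'\\n", "exit_code": 1}}"""

if __name__ == "__main__":
    trace = json.loads(SAMPLE)
    for depth, action, code, error in find_failures(trace):
        # Indent by nesting depth so the position in the task tree stays visible.
        print("  " * depth + f"{action} (exit {code}): {error.strip()}")
```

To run it against a real trace, save the copied text to a file and pass `json.load(f)` instead of `SAMPLE`. For the trace above it should report only the top-level `restore-cluster` task, since every `create-cluster` and `add-module` subtask exited with 0.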

…and though it’s visible in the screenshot above, the traceback shown under “More info” is:

```
Traceback (most recent call last):
  File "/var/lib/nethserver/cluster/actions/restore-cluster/30load", line 53, in <module>
    rdb.hset(f'cluster/user_domain/ldap/{d}/ui_names', mapping=domain['ui_names'])
                                                               ~~~~~~^^^^^^^^^^^^
KeyError: 'ui_names'
```
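The failing line in `30load` indexes `domain['ui_names']` directly, so any LDAP domain entry in the backup that lacks that key aborts the whole restore. Purely as an illustration, here is a minimal sketch of a more tolerant version. It assumes (nothing above confirms this) that a missing `ui_names` can safely be treated as "nothing to restore". `restore_ui_names` and `FakeRedis` are hypothetical names, not part of NS8; `FakeRedis` only stands in for a redis-py client so the sketch runs without a server.

```python
from typing import Any


def restore_ui_names(rdb: Any, d: str, domain: dict) -> bool:
    """Hypothetical tolerant replacement for the failing line in 30load.

    Writes the ui_names hash for LDAP domain `d` only when the backup entry
    actually contains a non-empty ui_names mapping. Returns True if written.
    """
    ui_names = domain.get("ui_names") or {}
    if not ui_names:
        # redis-py's hset() raises DataError when given no field/value pairs,
        # so skip the call rather than pass an empty mapping.
        return False
    rdb.hset(f"cluster/user_domain/ldap/{d}/ui_names", mapping=ui_names)
    return True


class FakeRedis:
    """Minimal in-memory stand-in exposing only hset(name, mapping=...)."""

    def __init__(self) -> None:
        self.hashes: dict[str, dict[str, str]] = {}

    def hset(self, name: str, mapping: dict[str, str]) -> int:
        self.hashes.setdefault(name, {}).update(mapping)
        return len(mapping)


if __name__ == "__main__":
    rdb = FakeRedis()
    print(restore_ui_names(rdb, "example.org", {}))  # key missing: nothing written
    print(restore_ui_names(rdb, "example.org", {"ui_names": {"u1": "User One"}}))
    print(rdb.hashes)
```

Whether skipping is the right behavior depends on what `ui_names` is supposed to hold and why this backup lacks it, which only the NS8 developers can say.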

…and apparently this is it; I can’t attempt another cluster restore without blowing away this VM and reinstalling NS8. That needs to be fixed.