NethServer 8,

We have used USB hard disks to back up NethServer for years (one at the machine, two at home). Since an update around 05.08.2025, however, there has been a backup alert. We can mount the USB-HD and see the older backups, and there is enough free space left on it. Starting the backup by hand gives this list of errors:

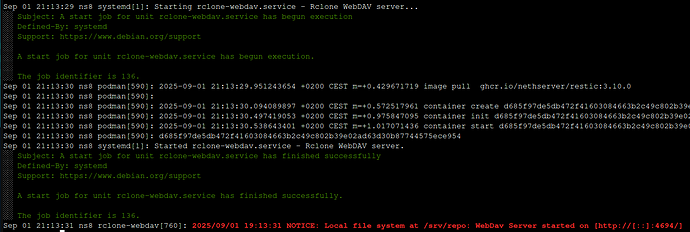

Task module/crowdsec1/run-backup run failed: {'output': '', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:40 CRITICAL: Failed to create file system for ":webdav:/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f": read metadata failed: Propfind "http://10.5.4.1:4694/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f\nrestic init\nrclone: 2025/08/11 11:14:00 CRITICAL: Failed to create file system for ":webdav:/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f": read metadata failed: Propfind "http://10.5.4.1:4694/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f failed: Fatal: unable to open repository at rclone::webdav:/crowdsec/f561a2ea-52dd-4daf-a862-6d755121783f: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-crowdsec1-1452421\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/mail1/run-backup run failed: {'output': 'Dumping Mail state to disk:\nSaving Maildir IMAP folder index:\nSaving service status:\n', 'error': 'Error: crun: setns(pid=3148, CLONE_NEWUSER): Operation not permitted: OCI permission denied\nrestic snapshots\nrclone: 2025/08/11 11:13:43 CRITICAL: Failed to create file system for ":webdav:/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f": read metadata failed: Propfind "http://10.5.4.1:4694/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f\nrestic init\nrclone: 2025/08/11 11:14:00 CRITICAL: Failed to create file system for ":webdav:/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f": read metadata failed: Propfind "http://10.5.4.1:4694/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f failed: Fatal: unable to open repository at rclone::webdav:/mail/159e4824-fc0d-427c-bfb5-84d8172a6d4f: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-mail1-1452433\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/imapsync1/run-backup run failed: {'output': '', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:38 CRITICAL: Failed to create file system for ":webdav:/imapsync/209e1bde-cb38-4456-8b71-68102013a203": read metadata failed: Propfind "http://10.5.4.1:4694/imapsync/209e1bde-cb38-4456-8b71-68102013a203": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/imapsync/209e1bde-cb38-4456-8b71-68102013a203: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path imapsync/209e1bde-cb38-4456-8b71-68102013a203\nrestic init\nrclone: 2025/08/11 11:13:58 CRITICAL: Failed to create file system for ":webdav:/imapsync/209e1bde-cb38-4456-8b71-68102013a203": read metadata failed: Propfind "http://10.5.4.1:4694/imapsync/209e1bde-cb38-4456-8b71-68102013a203": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/imapsync/209e1bde-cb38-4456-8b71-68102013a203 failed: Fatal: unable to open repository at rclone::webdav:/imapsync/209e1bde-cb38-4456-8b71-68102013a203: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-imapsync1-1452423\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/openldap1/run-backup run failed: {'output': 'Dumping state to LDIF files:\n', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:42 CRITICAL: Failed to create file system for ":webdav:/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243": read metadata failed: Propfind "http://10.5.4.1:4694/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path openldap/b2878c23-bf64-4f7c-894e-8dad7a984243\nrestic init\nrclone: 2025/08/11 11:14:00 CRITICAL: Failed to create file system for ":webdav:/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243": read metadata failed: Propfind "http://10.5.4.1:4694/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243 failed: Fatal: unable to open repository at rclone::webdav:/openldap/b2878c23-bf64-4f7c-894e-8dad7a984243: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-openldap1-1452422\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/sogo1/run-backup run failed: {'output': '', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:42 CRITICAL: Failed to create file system for ":webdav:/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf": read metadata failed: Propfind "http://10.5.4.1:4694/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf\nrestic init\nrclone: 2025/08/11 11:14:00 CRITICAL: Failed to create file system for ":webdav:/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf": read metadata failed: Propfind "http://10.5.4.1:4694/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf failed: Fatal: unable to open repository at rclone::webdav:/sogo/b1530faa-9b71-46eb-b94d-a3d1292d0dcf: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-sogo1-1452427\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/loki1/run-backup run failed: {'output': '', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:39 CRITICAL: Failed to create file system for ":webdav:/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292": read metadata failed: Propfind "http://10.5.4.1:4694/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path loki/cc18d93b-fc25-478f-88a2-240f1a1bd292\nrestic init\nrclone: 2025/08/11 11:14:00 CRITICAL: Failed to create file system for ":webdav:/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292": read metadata failed: Propfind "http://10.5.4.1:4694/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292 failed: Fatal: unable to open repository at rclone::webdav:/loki/cc18d93b-fc25-478f-88a2-240f1a1bd292: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-loki1-1452424\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/traefik1/run-backup run failed: {'output': "mkdir: Verzeichnis 'state-backup' angelegt\n'traefik.yaml' -> 'state-backup/traefik.yaml'\n'configs' -> 'state-backup/configs'\n'configs/pasw.yml' -> 'state-backup/configs/pasw.yml'\n'configs/cluster-admin.yml' -> 'state-backup/configs/cluster-admin.yml'\n'configs/3CX.yml' -> 'state-backup/configs/3CX.yml'\n'configs/mail1-rspamd.yml' -> 'state-backup/configs/mail1-rspamd.yml'\n'configs/sogo1.yml' -> 'state-backup/configs/sogo1.yml'\n'configs/_http2https.yml' -> 'state-backup/configs/_http2https.yml'\n'configs/esweb.yml' -> 'state-backup/configs/esweb.yml'\n'configs/_default_cert.yml' -> 'state-backup/configs/_default_cert.yml'\n'configs/bma-cloud.yml' -> 'state-backup/configs/bma-cloud.yml'\n'configs/nextcloud2.yml' -> 'state-backup/configs/nextcloud2.yml'\n'configs/openldap1-amld.yml' -> 'state-backup/configs/openldap1-amld.yml'\n'configs/_api.yml' -> 'state-backup/configs/_api.yml'\n'manual_flags' -> 'state-backup/manual_flags'\n'manual_flags/pasw' -> 'state-backup/manual_flags/pasw'\n'manual_flags/esweb' -> 'state-backup/manual_flags/esweb'\n'manual_flags/bma-cloud' -> 'state-backup/manual_flags/bma-cloud'\n'manual_flags/3CX' -> 'state-backup/manual_flags/3CX'\n'custom_certificates' -> 'state-backup/custom_certificates'\n'acme' -> 'state-backup/acme'\n'acme/acme.json.acmejson-notify' -> 'state-backup/acme/acme.json.acmejson-notify'\n'acme/acme.json' -> 'state-backup/acme/acme.json'\n", 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:38 CRITICAL: Failed to create file system for ":webdav:/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7": read metadata failed: Propfind "http://10.5.4.1:4694/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path 
traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7\nrestic init\nrclone: 2025/08/11 11:13:58 CRITICAL: Failed to create file system for ":webdav:/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7": read metadata failed: Propfind "http://10.5.4.1:4694/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7 failed: Fatal: unable to open repository at rclone::webdav:/traefik/198d25f3-bc54-428d-84d9-2f7ed5796bb7: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-traefik1-1452426\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/nextcloud2/run-backup run failed: {'output': '', 'error': 'restic snapshots\nTrying to pull ghcr.io/nethserver/restic:3.9.2...\nGetting image source signatures\nCopying blob sha256:b0f6f1c319a1570f67352d490370f0aeb5c0e67a087baf2d5f301ad51ec18858\nCopying blob sha256:2246b04badcba6c8a7d16e25fade69c25c34ce7d8ff8726511b2d85121150216\nCopying config sha256:363b1008aad8b214eac80680f5f3a721137c9465f0bd9c47548a894bf8a99ec0\nWriting manifest to image destination\nStoring signatures\nrclone: 2025/08/11 11:14:39 CRITICAL: Failed to create file system for ":webdav:/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4": read metadata failed: Propfind "http://10.5.4.1:4694/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4\nrestic init\nrclone: 2025/08/11 11:14:45 CRITICAL: Failed to create file system for ":webdav:/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4": read metadata failed: Propfind "http://10.5.4.1:4694/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4 failed: Fatal: unable to open repository at rclone::webdav:/nextcloud/c76251d2-b877-46a2-b1b9-d82b149407d4: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. 
Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-nextcloud2-1452437\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}

Task module/dnsmasq1/run-backup run failed: {'output': '', 'error': 'restic snapshots\nrclone: 2025/08/11 11:13:36 CRITICAL: Failed to create file system for ":webdav:/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3": read metadata failed: Propfind "http://10.5.4.1:4694/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: unable to open repository at rclone::webdav:/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3: error talking HTTP to rclone: exit status 1\nInitializing repository 86d1a8ac-ef89-557a-8e19-8582ab86b7c4 at path dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3\nrestic init\nrclone: 2025/08/11 11:13:43 CRITICAL: Failed to create file system for ":webdav:/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3": read metadata failed: Propfind "http://10.5.4.1:4694/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3": dial tcp 10.5.4.1:4694: connect: connection refused\nFatal: create repository at rclone::webdav:/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3 failed: Fatal: unable to open repository at rclone::webdav:/dnsmasq/dbf1ae4b-edb6-44aa-b9ac-c3ed6d1d46a3: error talking HTTP to rclone: exit status 1\n\n[ERROR] restic init failed. Command \'[\'podman\', \'run\', \'-i\', \'--rm\', \'--name=restic-dnsmasq1-1452425\', \'--privileged\', \'--network=host\', \'--volume=restic-cache:/var/cache/restic\', \'--log-driver=none\', \'-e\', \'RESTIC_PASSWORD\', \'-e\', \'RESTIC_CACHE_DIR\', \'-e\', \'RESTIC_REPOSITORY\', \'-e\', \'RCLONE_WEBDAV_URL\', \'ghcr.io/nethserver/restic:3.9.2\', \'init\']\' returned non-zero exit status 1.\n', 'exit_code': 1}
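
Every task above fails with the same `dial tcp 10.5.4.1:4694: connect: connection refused` message, so a first check could be whether anything accepts connections on that address at all. Below is a minimal Python sketch, not an official NethServer tool: the helper names `refused_endpoints` and `port_is_open` are my own, and it only assumes that Python 3 is available on the node. It pulls the refused host and port out of such an error text and tries a plain TCP connect.

```python
import re
import socket

# Matches the "dial tcp HOST:PORT: connect: connection refused" fragment
# that appears in the rclone errors quoted above.
DIAL_RE = re.compile(
    r"dial tcp (\d{1,3}(?:\.\d{1,3}){3}):(\d+): connect: connection refused"
)


def refused_endpoints(log_text):
    """Return the sorted, de-duplicated (host, port) pairs that refused a connection."""
    return sorted({(host, int(port)) for host, port in DIAL_RE.findall(log_text)})


def port_is_open(host, port, timeout=2.0):
    """Try a plain TCP connect; True only if something accepts the connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # One error fragment copied from the crowdsec1 task output above.
    sample = (
        'Propfind "http://10.5.4.1:4694/crowdsec/'
        'f561a2ea-52dd-4daf-a862-6d755121783f": '
        "dial tcp 10.5.4.1:4694: connect: connection refused"
    )
    for host, port in refused_endpoints(sample):
        state = "open" if port_is_open(host, port) else "closed or unreachable"
        print(f"{host}:{port} is {state}")
```

If the port is reported as closed when run on the node itself, the restic containers are probably not the problem; the service that should listen on 10.5.4.1:4694 is not running or not reachable.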


What is wrong? How can I find the cause?