@michelandre

@pike

Hi Guys

Sorry for the delay in replying, I was on a lengthy support call…

In the two cases involved, the massive data accumulation was due to wiring issues.

In one case, the network wiring in the wall was done by an electrician around 2000 (20 years ago).

What a lot of people forget is that in-wall wiring is nothing but a network cable hidden from view. It can be damaged, corroded, damp, or broken… You don’t see it!

In this case, the wiring was done to Cat6 specs, i.e. 1 Gbit/s…

The quality must have decayed over time, and it wasn’t noticed until the provider announced a 100% Internet speed upgrade. They have fiber and 300 Mbit/s now.

They complained to the provider that no speed increase was noticeable, and the provider told them to hook up a PC/notebook directly to the fiber modem. The speed was there!

My client asked me if the new, 3-month-old firewall was crap… I told him to hook up his PC/notebook directly to the firewall. The speed was there.

After the fiber modem, the wiring went into our OPNsense firewall, and from there to a wall socket leading to the server room.

And in the server room: no more speed. The wall cabling had deteriorated to about 120 Mbit/s… A lot of runts and other garbage on the Ethernet due to defective cabling.
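
For anyone who wants to check for this kind of thing before calling an electrician, here is a rough sketch (not what we actually ran on site) of how receive errors could be compared against traffic on a Linux host. It assumes the standard sysfs counters under `/sys/class/net/<iface>/statistics/`; the function names and the 0.1% threshold are my own placeholders, so adjust them to taste.

```python
from pathlib import Path

# Assumed threshold: on a healthy wired link, receive errors should be near zero.
ERROR_RATIO_LIMIT = 0.001  # 0.1 % of received frames, a placeholder value


def read_counters(iface):
    """Read receive counters from Linux sysfs (assumes a Linux host)."""
    stats = Path("/sys/class/net") / iface / "statistics"
    names = ("rx_packets", "rx_errors", "rx_crc_errors", "rx_length_errors")
    return {name: int((stats / name).read_text()) for name in names}


def error_ratio(before, after):
    """Share of errored frames among all frames seen between two samples."""
    packets = after["rx_packets"] - before["rx_packets"]
    errors = after["rx_errors"] - before["rx_errors"]
    total = packets + errors
    if total <= 0:
        return 0.0  # no traffic (or a counter reset) between the samples
    return errors / total


def looks_like_bad_cabling(before, after, limit=ERROR_RATIO_LIMIT):
    """Flag the link when the error share exceeds the assumed limit."""
    return error_ratio(before, after) > limit
```

Take one sample with `read_counters("eth0")`, wait a minute under load, take a second one, and pass both to `looks_like_bad_cabling`. A steadily climbing error counter on a short, simple link is a good hint to look at the cable or the wall socket first.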

An electrician put in new wiring, and everything worked…

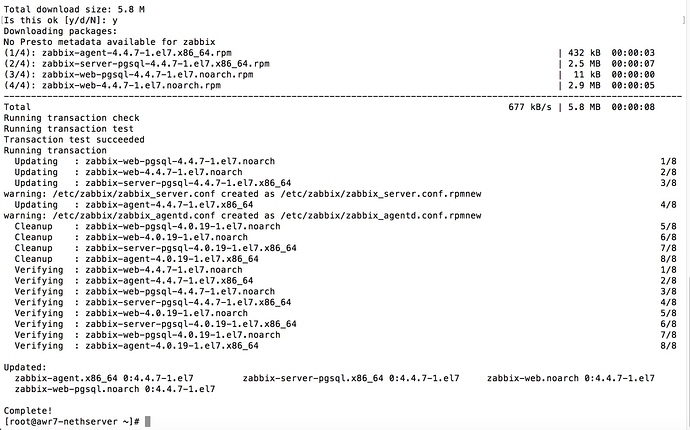

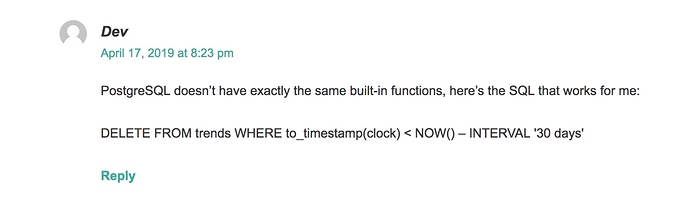

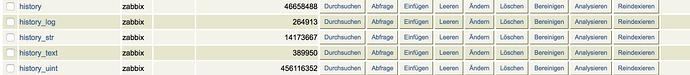

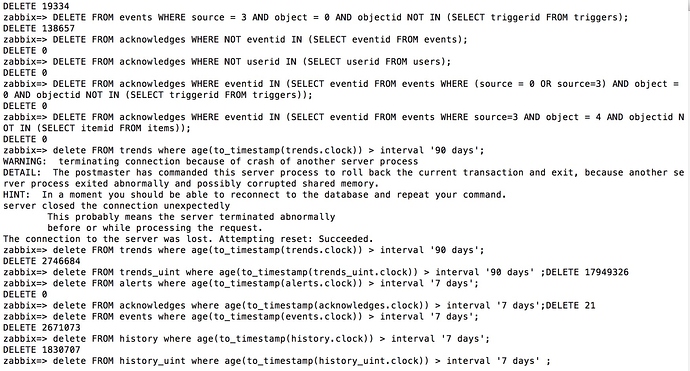

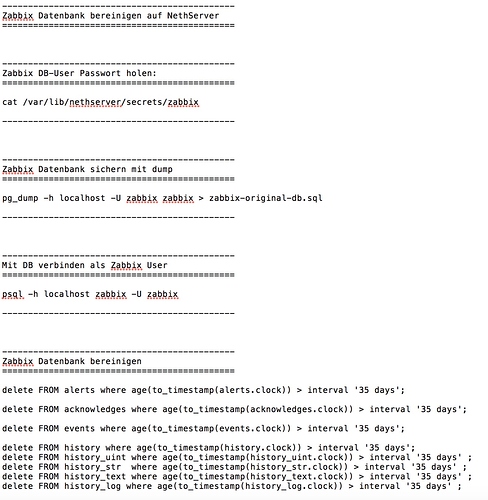

What we didn’t think about at the time was how much data had accumulated in Zabbix due to those abundant errors!

The other case was something similar, in a 35-year-old building…

Sh*t happens…

But still needs to be cleaned up…

The networks are fairly small, with only about 10-15 PCs…

My 2 cents

Andy