@france

Hi Francesco

90% of my 30 clients run Proxmox exclusively with external storage. In each case this is a Synology NAS (mostly 4-8 bays, nothing smaller), connected both to the LAN (for administration) and directly to the Proxmox host. The larger models have 4 NICs, which I then use bonded…

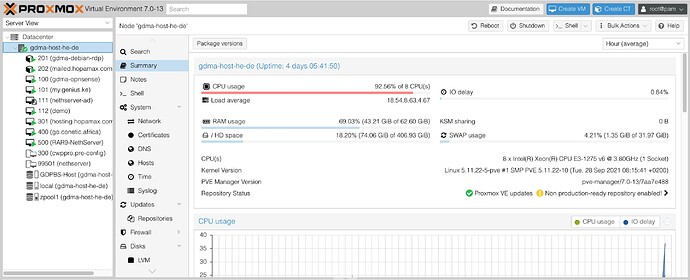

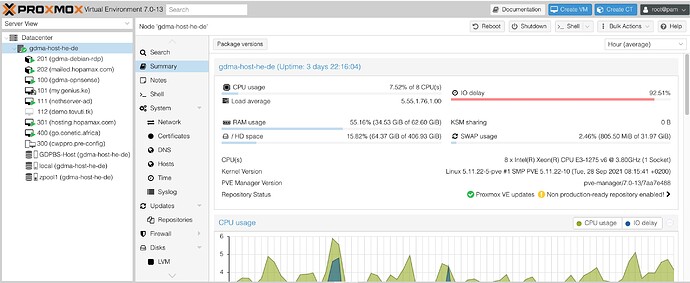

One of the largest sites has about 35 employees and uses NethServer as AD, file server, NextCloud, Zabbix and more. It runs on a fairly powerful HP ProLiant ML380 Gen10 server. The VMs are all stored on a Synology DS1817+ NAS with disks in Hybrid RAID.

This NethServer actually serves as AD for three sites, connected via VPN.

No performance issues…

My Personal Suggestion:

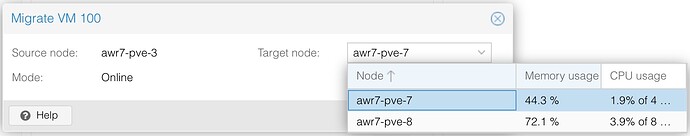

Connect a “decent” NAS (with lower usage it does not need to be dedicated to Proxmox!) to your LAN - and directly to a separate NIC in your Proxmox, or via a switch to all nodes in your cluster.

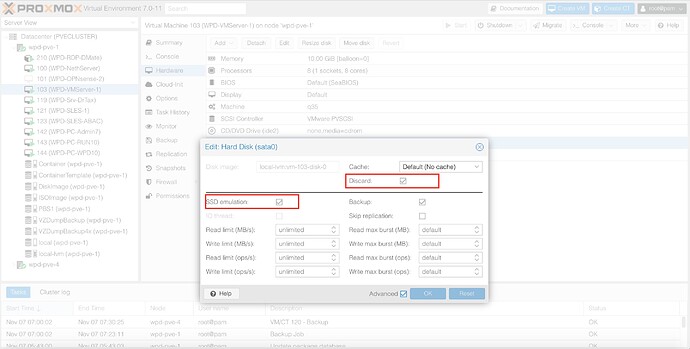

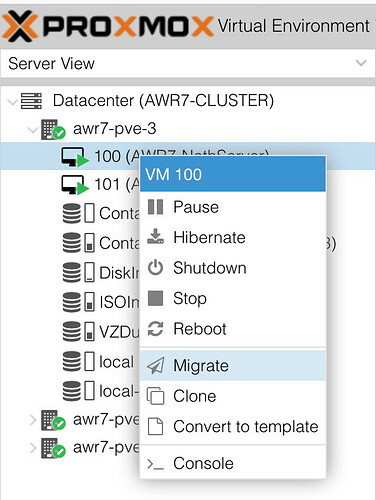

Define this as Storage in Proxmox, and migrate your VM disk there.
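As a rough sketch of what “define this as Storage” can end up looking like: assuming the NAS exports a shared folder over NFS (NFS is only an assumption for this example, iSCSI is also possible), the resulting entry in `/etc/pve/storage.cfg` could look like the one below. The storage ID `nas-pve`, the address `10.10.10.2` and the export path `/volume1/proxmox` are made-up placeholders, not values from the sites shown here, and the comment line is only an annotation for this post.

```text
# Hypothetical example: NFS share on the NAS, used for VM disk images
nfs: nas-pve
        server 10.10.10.2
        export /volume1/proxmox
        path /mnt/pve/nas-pve
        content images
```

The same entry can be created in the GUI under Datacenter → Storage → Add → NFS. After that, the VM disk can be moved to the new storage with the move disk action in the VM's Hardware tab.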

Start the VM and observe (CPU, load, etc.) over a couple of days, and give some feedback!

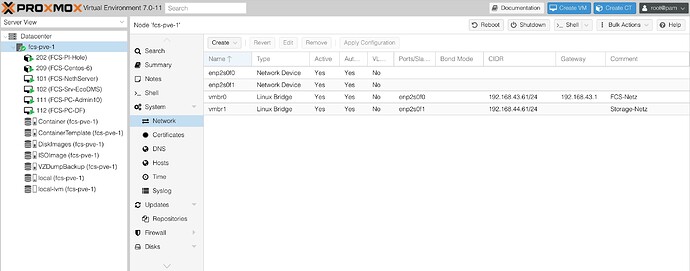

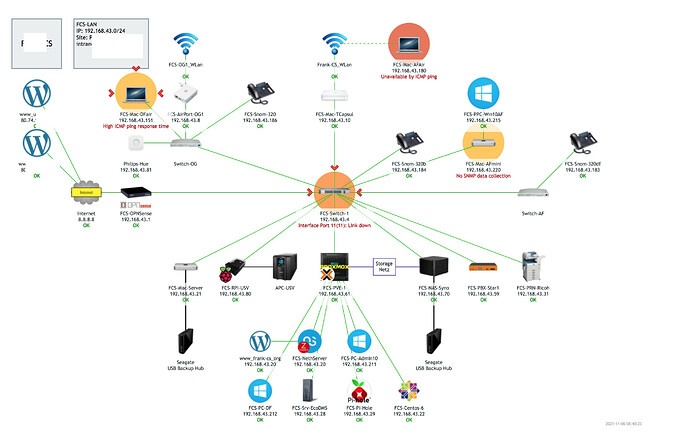

This is the network of a smaller company using an HP Microserver Gen 10 as Proxmox host.

The server has only 2 small SSDs, used as system disks; all storage is on the NAS.

The NAS is also used for other stuff…

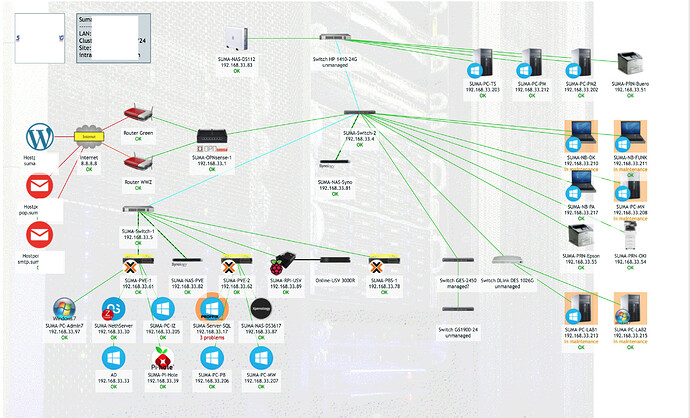

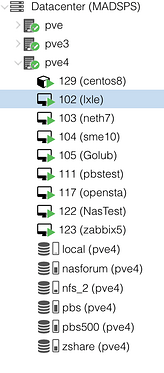

A slightly larger network, using 2 Proxmox hosts in a cluster, a dedicated Synology NAS-PVE, and a separate PBS (Proxmox Backup Server). There is a separate NAS (same model) for general usage, also used for NethServer backups.

All storage is still on hard disks, not SSDs.

Only PBS and Proxmox have SSD for the OS.

The Proxmox hosts each have a bonded LAN, a storage network and a cluster network.
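To give an idea of how these three networks can be laid out on one Proxmox node, here is a minimal sketch of `/etc/network/interfaces`. Everything in it is an assumption for illustration: the interface names (`eno1` to `eno4`), all IP addresses, and the bond mode (`active-backup`, chosen here only because it needs no special switch configuration; the actual sites may well use something else).

```text
# /etc/network/interfaces (excerpt); all names and addresses are placeholders

auto lo
iface lo inet loopback

iface eno1 inet manual
iface eno2 inet manual

# Bonded LAN uplink (bond mode is an assumption)
auto bond0
iface bond0 inet manual
        bond-slaves eno1 eno2
        bond-miimon 100
        bond-mode active-backup

# Bridge for VMs and management on top of the bond
auto vmbr0
iface vmbr0 inet static
        address 192.168.1.11/24
        gateway 192.168.1.1
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0

# Storage network to the NAS
auto eno3
iface eno3 inet static
        address 10.10.10.11/24

# Cluster (corosync) network
auto eno4
iface eno4 inet static
        address 10.10.20.11/24
```

Keeping storage and cluster traffic on their own NICs and subnets means a busy backup over the bonded LAN does not compete with VM disk I/O or cluster communication.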

Backups are done to PBS over the bonded LAN.

→ Extra Note:

The Synologys are all set to install updates (not upgrades) over the weekend.

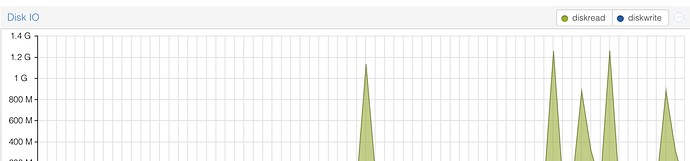

No VMs are ever shut down during this.

No issues in 5 years - Proxmox “caches” the disks of the VMs for this time.

My 2 cents

Andy