@capote

Hi Marko

High time that you activated this…

-> Fast Migration Cluster

Requirement: all Proxmox nodes must be in the same network (no other requirements!)

All three nodes should be at the same update level, or at least very close to it.
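To compare the update levels quickly, here is a minimal sketch that prints the `pve-manager` version of each node. It assumes root can SSH from this node to the others. The node names `hp1 hp2 hp3` are hypothetical (borrowed from the prompts used in this post), and the `pve_ver` helper is my own, not part of Proxmox. For a package-by-package comparison, `pveversion -v` on each node gives more detail.

```shell
#!/bin/sh
# Sketch: print the pve-manager version of every node so the update
# levels can be compared before building the cluster.
# Assumption: root can SSH to each node; NODES is a hypothetical list.
NODES="hp1 hp2 hp3"

# Extract the version field from a `pveversion` line of the form
# "pve-manager/<version>/<hash> (running kernel: <kernel>)".
pve_ver() {
  printf '%s\n' "$1" | cut -d/ -f2
}

# Only query the nodes when this is actually a Proxmox VE host.
if command -v pveversion >/dev/null 2>&1; then
  for n in $NODES; do
    printf '%s: %s\n' "$n" "$(pve_ver "$(ssh root@"$n" pveversion)")"
  done
fi
```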

Next step is to create the cluster.

Here is the procedure from my personal Proxmox cheat sheet:

Create the cluster

Log in via SSH to the first Proxmox VE node. Use a unique name for your cluster; this name cannot be changed later.

Create:

hp1# pvecm create YOUR-CLUSTER-NAME

For example:

hp1# pvecm create PVE-CLUST

To check the state of the cluster:

hp1# pvecm status
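The create step as a small script, a sketch only: `CLUSTER_NAME` and the `run`/`create_cluster` helpers are my own hypothetical names. By default the script only prints the commands (dry run); start it with `DRY_RUN=0` on hp1 to actually execute them.

```shell
#!/bin/sh
# Sketch: create the cluster on the first node (hp1) and show its state.
# DRY_RUN defaults to 1, so the commands are only printed; run the
# script with DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

# Example name from the text; it cannot be changed later.
CLUSTER_NAME="${CLUSTER_NAME:-PVE-CLUST}"

# Print a command, then execute it unless this is a dry run.
run() {
  echo "+ $*"
  [ "$DRY_RUN" = 1 ] || "$@"
}

create_cluster() {  # usage: create_cluster NAME
  run pvecm create "$1" && run pvecm status
}

create_cluster "$CLUSTER_NAME"
```

Besides `pvecm status`, `pvecm nodes` lists the current cluster members.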

Adding nodes to the cluster

Log in via SSH to each of the other Proxmox VE nodes. Please note that the nodes being added must not hold any VMs. (If they do, you will get conflicts with identical VMIDs. As a workaround, back up the VMs with vzdump and, after the cluster is configured, restore them to a different VMID.)
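The vzdump workaround could look like this sketch. VMID 100, the new VMID 200, the storage ID `backup-nas` and the archive path are all hypothetical, and it assumes the backup storage will still be reachable once the node is in the cluster (keep the storage.cfg warning below in mind). Stop mode is my own choice for a consistent backup, since the VM has to leave the node anyway.

```shell
#!/bin/sh
# Sketch: move a VM off a node that is about to join the cluster by
# backing it up with vzdump and restoring it under a new VMID afterwards.
# VMIDs 100/200 and storage "backup-nas" are hypothetical examples.
# DRY_RUN defaults to 1 (print only); set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

# Print a command, then execute it unless this is a dry run.
run() {
  echo "+ $*"
  [ "$DRY_RUN" = 1 ] || "$@"
}

# Step 1, before joining: back up the VM to storage that stays
# reachable after the cluster is configured (e.g. a NAS).
backup_vm() {  # usage: backup_vm VMID STORAGE
  run vzdump "$1" --storage "$2" --mode stop
}

# Step 2, after joining: restore the archive under an unused VMID.
restore_vm() {  # usage: restore_vm ARCHIVE NEW_VMID
  run qmrestore "$1" "$2"
}

backup_vm 100 backup-nas
# Placeholder path; vzdump prints the real archive file name.
restore_vm /mnt/pve/backup-nas/dump/vzdump-qemu-100-DATE.vma.zst 200
```

Between the two steps the VM still has to be removed from the joining node (e.g. with `qm destroy 100`), because the node must be empty before joining; only do that once the backup is verified.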

WARNING: Adding a node to the cluster will delete its current /etc/pve/storage.cfg. If you have VMs stored on the node, be prepared to add your storage locations back if necessary. Even though the storage locations disappear from the GUI, your data is still there.
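Given this warning, it doesn't hurt to keep a copy of the node's storage configuration before joining. A minimal sketch: the destination `/root/storage.cfg.before-join` is an arbitrary choice (anything outside /etc/pve will do), and `backup_storage_cfg` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: save the joining node's storage configuration so the storage
# definitions can be re-added after joining the cluster.
# Assumption: /root is a suitable place for the copy (outside /etc/pve).
backup_storage_cfg() {  # usage: backup_storage_cfg [SOURCE] [DEST_DIR]
  src="${1:-/etc/pve/storage.cfg}"
  dest_dir="${2:-/root}"
  dest="$dest_dir/storage.cfg.before-join"
  # Copy the file and print where it went.
  cp "$src" "$dest" && echo "$dest"
}

# Only run automatically on a host that actually has the file.
if [ -f /etc/pve/storage.cfg ]; then
  backup_storage_cfg
fi
```

After the join, compare the saved file with the cluster's /etc/pve/storage.cfg and re-add missing entries under Datacenter > Storage in the GUI, or with `pvesm add`.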

Add the current node to the cluster:

hp2# pvecm add IP-ADDRESS-CLUSTER

For IP-ADDRESS-CLUSTER, use the IP address of an existing cluster node.

To check the state of the cluster:

hp2# pvecm status

Repeat this on every node that should join the cluster.
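For the joining nodes, the same dry-run pattern as a sketch: `CLUSTER_IP` defaults to the placeholder documentation address 192.0.2.10, which you must replace with the IP of an existing member (hp1), and `join_cluster` is a hypothetical helper. Run it on each new node in turn.

```shell
#!/bin/sh
# Sketch: join this node (hp2, hp3, ...) to the existing cluster.
# CLUSTER_IP is a placeholder: use the IP address of a node that is
# already a cluster member. DRY_RUN defaults to 1 (print only);
# set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"
CLUSTER_IP="${CLUSTER_IP:-192.0.2.10}"

# Print a command, then execute it unless this is a dry run.
run() {
  echo "+ $*"
  [ "$DRY_RUN" = 1 ] || "$@"
}

join_cluster() {  # usage: join_cluster IP
  run pvecm add "$1" && run pvecm status
}

join_cluster "$CLUSTER_IP"
```

When executed for real, `pvecm add` normally asks for the root password of the existing node.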

If a VM is locked, you can unlock it with:

qm unlock VMID

(VMID is the numeric ID of the VM.)
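To avoid typos when unlocking, a small sketch that checks the argument first. It assumes VMIDs are plain integers of 100 or higher, the range Proxmox VE uses for guests; `is_vmid` and `unlock_vm` are hypothetical helpers. `qm list` shows the VMIDs on the current node.

```shell
#!/bin/sh
# Sketch: unlock a VM after checking that the argument looks like a VMID.
# Assumption: VMIDs are plain integers >= 100.
# DRY_RUN defaults to 1 (print only); set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

# Print a command, then execute it unless this is a dry run.
run() {
  echo "+ $*"
  [ "$DRY_RUN" = 1 ] || "$@"
}

is_vmid() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;  # empty or contains a non-digit
  esac
  [ "$1" -ge 100 ]
}

unlock_vm() {  # usage: unlock_vm VMID
  if is_vmid "$1"; then
    run qm unlock "$1"
  else
    echo "not a valid VMID: $1" >&2
    return 1
  fi
}
```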

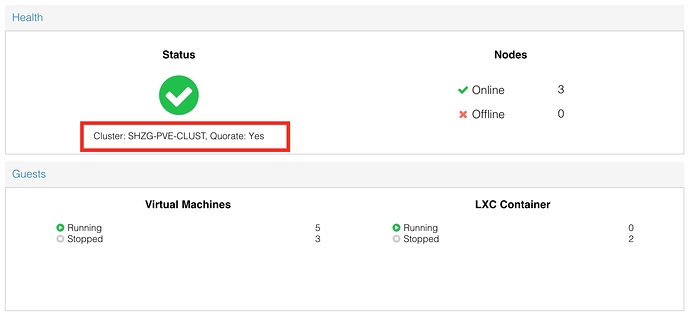

Now you have a cluster that can live-migrate your VMs between nodes!

With shared storage (VMs on a NAS/SAN), a live migration takes about 90 seconds, depending on the VM's RAM!
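A live migration from the shell, with a rough timing, as a sketch: VMID 100 and target node `hp2` are hypothetical, the disks are assumed to be on shared storage as described above, and the measured time will vary with the VM's RAM.

```shell
#!/bin/sh
# Sketch: live-migrate a running VM to another cluster node and time it.
# VMID 100 and target node "hp2" are hypothetical examples.
# DRY_RUN defaults to 1 (print only); set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

# Print a command, then execute it unless this is a dry run.
run() {
  echo "+ $*"
  [ "$DRY_RUN" = 1 ] || "$@"
}

migrate_live() {  # usage: migrate_live VMID TARGET_NODE
  start=$(date +%s)
  run qm migrate "$1" "$2" --online || return 1
  # Elapsed wall-clock time in whole seconds.
  echo "took $(( $(date +%s) - start )) s"
}

migrate_live 100 hp2
```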

Try it, it’s that easy!

Andy