While putting the Ns8-copyparty app to good use, I have run into an issue transferring big files (over 6 GB). I am using rclone to sync large data sets.

I have a file of about 13.5 GB, and roughly 6.5 GB into the transfer, things start to fail.

Below is the output from a failing rclone session. rclone retries when things start to go wrong, until I hit Ctrl-C to abort the mess.

```
2025/11/02 15:42:45 DEBUG : rclone: Version "v1.71.2" starting with parameters ["/snap/rclone/561/bin/rclone" "sync" "bigfile" "cpp2:bigfile" "--progress" "-vv"]
2025/11/02 15:42:45 DEBUG : Creating backend with remote "bigfile"
2025/11/02 15:42:45 DEBUG : Using config file from "/home/user/snap/rclone/561/.config/rclone/rclone.conf"
2025/11/02 15:42:45 DEBUG : fs cache: renaming cache item "bigfile" to be canonical "/home/user/Downloads/cpp/bigfile"
2025/11/02 15:42:45 DEBUG : Creating backend with remote "cpp2:bigfile"
2025/11/02 15:42:45 DEBUG : found headers:
2025/11/02 15:42:45 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Need to transfer - File not found at Destination
2025/11/02 15:42:45 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Need to transfer - File not found at Destination
2025/11/02 15:42:45 DEBUG : webdav root 'bigfile': Waiting for checks to finish
2025/11/02 15:42:45 DEBUG : webdav root 'bigfile': Waiting for transfers to finish
2025/11/02 15:42:45 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Update will use the normal upload strategy (no chunks)
2025/11/02 15:42:45 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Update will use the normal upload strategy (no chunks)
2025/11/02 15:42:46 INFO : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Copied (new)
2025/11/02 15:43:47 ERROR : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Failed to copy: unchunked simple update failed: Client Closed Request: 499 status code 499
2025/11/02 15:43:47 ERROR : webdav root 'bigfile': not deleting files as there were IO errors
2025/11/02 15:43:47 ERROR : webdav root 'bigfile': not deleting directories as there were IO errors
2025/11/02 15:43:47 ERROR : Attempt 1/3 failed with 1 errors and: unchunked simple update failed: Client Closed Request: 499 status code 499
2025/11/02 15:43:47 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Need to transfer - File not found at Destination
2025/11/02 15:43:47 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Sizes identical
2025/11/02 15:43:47 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Unchanged skipping
2025/11/02 15:43:47 DEBUG : webdav root 'bigfile': Waiting for checks to finish
2025/11/02 15:43:47 DEBUG : webdav root 'bigfile': Waiting for transfers to finish
2025/11/02 15:43:47 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Update will use the normal upload strategy (no chunks)
2025/11/02 15:44:47 DEBUG : pacer: low level retry 1/1 (error Gateway Timeout: 504 Gateway Timeout)
2025/11/02 15:44:47 DEBUG : pacer: Rate limited, increasing sleep to 20ms
2025/11/02 15:44:48 DEBUG : pacer: Reducing sleep to 15ms
2025/11/02 15:44:48 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Received error: unchunked simple update failed: Gateway Timeout: 504 Gateway Timeout - low level retry 0/10
2025/11/02 15:44:48 DEBUG : pacer: Reducing sleep to 11.25ms
2025/11/02 15:44:48 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Update will use the normal upload strategy (no chunks)
Transferred: 13.471 GiB / 26.671 GiB, 51%, 103.827 MiB/s, ETA 2m10s
Checks: 1 / 1, 100%, Listed 5
Transferred: 1 / 2, 50%
Elapsed time: 2m6.5s
Transferring:
* pack-53093d7e8541e9954…59492c15df175d5db.pack: 2% /13.565Gi, 111.633Mi/s, 2m1s^C
```

On the NS8 side, the Traefik log looks like this:

```
nov 02 15:44:45 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:44:45 +0000] "GET / HTTP/1.1" 200 5058 "-" "-" 2379 "copyparty3-http@file" "http://127.0.0.1:20001" 0ms
nov 02 15:44:46 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:44:46 +0000] "GET /?tree=&dots HTTP/1.1" 200 18 "-" "-" 2380 "copyparty3-http@file" "http://127.0.0.1:20001" 0ms
nov 02 15:44:47 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:43:47 +0000] "PUT /bigfile/pack-53093d7e8541e99543b2f9959492c15df175d5db.pack HTTP/1.1" 504 15 "-" "-" 2370 "copyparty3-http@file" "http://127.0.0.1:20001" 60000ms
nov 02 15:44:48 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:44:48 +0000] "DELETE /bigfile/pack-53093d7e8541e99543b2f9959492c15df175d5db.pack HTTP/1.1" 200 35 "-" "-" 2381 "copyparty3-http@file" "http://127.0.0.1:20001" 321ms
nov 02 15:44:48 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:44:48 +0000] "MKCOL /bigfile/ HTTP/1.1" 405 0 "-" "-" 2382 "copyparty3-http@file" "http://127.0.0.1:20001" 0ms
nov 02 15:44:52 ns8-test2 traefik[1222]: 192.168.10.246 - - [02/Nov/2025:14:44:48 +0000] "PUT /bigfile/pack-53093d7e8541e99543b2f9959492c15df175d5db.pack HTTP/1.1" 502 11 "-" "-" 2383 "copyparty3-http@file" "http://127.0.0.1:20001" 3649ms
```
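To make the timing pattern in this log easier to spot, here is a small sketch that pulls the method, status and duration out of each Traefik line and flags 5xx responses that ran into a time limit. It assumes the access-log layout seen above (request duration as the trailing `NNNms` field) and a 60000 ms limit taken from the failing PUT; `Request`, `parse_line` and `slow_failures` are hypothetical helper names, not part of Traefik or NS8.

```python
import re
from typing import Iterable, Iterator, NamedTuple, Optional

# Matches the request line, status code and trailing duration of a
# Traefik access-log line in the layout shown above (assumed, not a spec).
LINE_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+" '
    r'(?P<status>\d{3}) \d+ .* (?P<ms>\d+)ms\s*$'
)


class Request(NamedTuple):
    method: str
    path: str
    status: int
    duration_ms: int


def parse_line(line: str) -> Optional[Request]:
    """Return the parsed request, or None if the line does not match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    return Request(m["method"], m["path"], int(m["status"]), int(m["ms"]))


def slow_failures(lines: Iterable[str], limit_ms: int = 60_000) -> Iterator[Request]:
    """Yield 5xx requests whose duration reached the (assumed) time limit."""
    for line in lines:
        req = parse_line(line)
        if req is not None and req.status >= 500 and req.duration_ms >= limit_ms:
            yield req
```

Fed the Traefik lines above (with the wrapped lines joined), this should flag only the first PUT (504 after 60000 ms); the retried PUT fails with a 502 after 3649 ms and is not caught by this filter.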

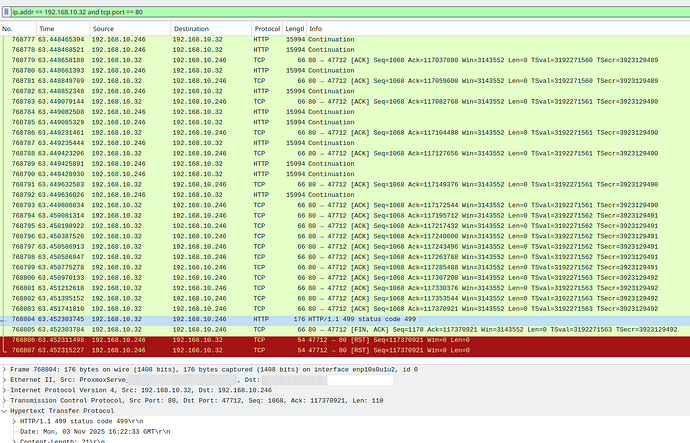

I have built a variant of the copyparty module that does not rely on the reverse proxy and instead uses direct port mapping. With this variant the transfer works flawlessly, as can be seen below.

```
ownloads/cpp$ rclone sync bigfile ns8-test2:bigfile --progress -vv
2025/11/02 16:07:43 DEBUG : rclone: Version "v1.71.2" starting with parameters ["/snap/rclone/561/bin/rclone" "sync" "bigfile" "ns8-test2:bigfile" "--progress" "-vv"]
2025/11/02 16:07:43 DEBUG : Creating backend with remote "bigfile"
2025/11/02 16:07:43 DEBUG : Using config file from "/home/user/snap/rclone/561/.config/rclone/rclone.conf"
2025/11/02 16:07:43 DEBUG : fs cache: renaming cache item "bigfile" to be canonical "/home/user/Downloads/cpp/bigfile"
2025/11/02 16:07:43 DEBUG : Creating backend with remote "ns8-test2:bigfile"
2025/11/02 16:07:43 DEBUG : found headers:
2025/11/02 16:07:43 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Need to transfer - File not found at Destination
2025/11/02 16:07:43 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Sizes identical
2025/11/02 16:07:43 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.idx: Unchanged skipping
2025/11/02 16:07:43 DEBUG : webdav root 'bigfile': Waiting for checks to finish
2025/11/02 16:07:43 DEBUG : webdav root 'bigfile': Waiting for transfers to finish
2025/11/02 16:07:43 DEBUG : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Update will use the normal upload strategy (no chunks)
2025/11/02 16:09:48 INFO : pack-53093d7e8541e99543b2f9959492c15df175d5db.pack: Copied (new)
2025/11/02 16:09:48 DEBUG : Waiting for deletions to finish
Transferred: 13.565 GiB / 13.565 GiB, 100%, 111.577 MiB/s, ETA 0s
Checks: 1 / 1, 100%, Listed 3
Transferred: 1 / 1, 100%
Elapsed time: 2m4.4s
2025/11/02 16:09:48 INFO :
Transferred: 13.565 GiB / 13.565 GiB, 100%, 111.577 MiB/s, ETA 0s
Checks: 1 / 1, 100%, Listed 3
Transferred: 1 / 1, 100%
Elapsed time: 2m4.4s
```

For a second opinion, I installed ns8-webserver, which also relies on the reverse proxy module and includes an SFTPGo container for uploading content.

Here the transfer fails too.

These indications point towards the Traefik proxy as the component that is unhappy with big file transfers: in its log, the failing PUT ends with a 504 after exactly 60000 ms.
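A back-of-the-envelope check fits this picture. Assuming the upload rate from the successful direct-port run (111.577 MiB/s) also holds through the proxy, a transfer cut off after 60 s would stop at roughly 6.5 GiB, which is about where the proxied uploads fail, while the whole 13.565 GiB file needs a bit over two minutes. This only shows consistency with a fixed 60-second limit on the proxied path; it does not tell where such a limit is configured. The helper names below are hypothetical.

```python
MIB_PER_GIB = 1024


def gib_sent_before_cutoff(rate_mib_s: float, cutoff_s: float) -> float:
    """GiB uploaded at a constant rate before a hard time cutoff."""
    return rate_mib_s * cutoff_s / MIB_PER_GIB


def seconds_needed(size_gib: float, rate_mib_s: float) -> float:
    """Seconds a complete upload takes at a constant rate."""
    return size_gib * MIB_PER_GIB / rate_mib_s


rate = 111.577   # MiB/s, from the successful direct-port run (assumed constant)
cutoff = 60.0    # s, the PUT duration Traefik logged (60000ms)
size = 13.565    # GiB, the .pack file

print(f"{gib_sent_before_cutoff(rate, cutoff):.2f} GiB before cutoff")  # → 6.54 GiB before cutoff
print(f"{seconds_needed(size, rate):.0f} s needed for the whole file")   # → 124 s needed for the whole file
```

The 124 s figure lines up with the 2m4.4s elapsed time of the direct-port run, which is a useful sanity check on the constant-rate assumption.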

When time allows, I will probably fetch a copy of Traefik, run it outside NS8, and see how it behaves.

In the meantime, does anyone have thoughts on what is going on here?