Well thought out!

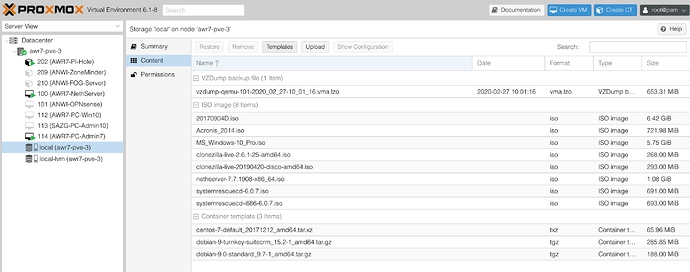

Doing backups for all VMs is never a bad idea:

Better to have one backup too many than one too few!

I run mine automatically every night; a few VMs are backed up twice a day, once at lunch and once after work.
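With a mixed schedule like that, it helps to check now and then that every VM actually has a recent backup. Below is a minimal sketch, assuming you already have each VM's newest backup timestamp (for example, collected from your backup storage); the `INTERVALS` and `GRACE` values and the `stale_backups` helper are hypothetical, not anything built into Proxmox.

```python
from datetime import datetime, timedelta

# Hypothetical schedule classes: most VMs nightly, a few twice a day.
INTERVALS = {"nightly": timedelta(hours=24), "twice_daily": timedelta(hours=12)}
# Extra slack so a long-running backup job isn't flagged immediately.
GRACE = timedelta(hours=2)


def stale_backups(last_backup, schedule, now):
    """Return sorted VM IDs whose newest backup is older than their schedule allows.

    last_backup: dict of VM ID -> datetime of newest backup (missing = never backed up)
    schedule:    dict of VM ID -> key in INTERVALS
    """
    stale = []
    for vmid, kind in schedule.items():
        ts = last_backup.get(vmid)
        if ts is None or now - ts > INTERVALS[kind] + GRACE:
            stale.append(vmid)
    return sorted(stale)


now = datetime(2024, 5, 2, 14, 0)
last = {
    100: datetime(2024, 5, 1, 22, 0),   # nightly, 16 h old: fine
    101: datetime(2024, 5, 1, 22, 0),   # twice daily, 16 h old: missed the lunch run
    102: datetime(2024, 4, 30, 22, 0),  # nightly, 40 h old: missed a night
}
schedule = {100: "nightly", 101: "twice_daily", 102: "nightly", 103: "nightly"}
print(stale_backups(last, schedule, now))  # VM 103 has no backup at all
```

In practice a monitoring system would raise this as an alert rather than you running a script by hand.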

Backups CAN take a bit longer; the 15 minutes referred to creating the cluster and rebooting.

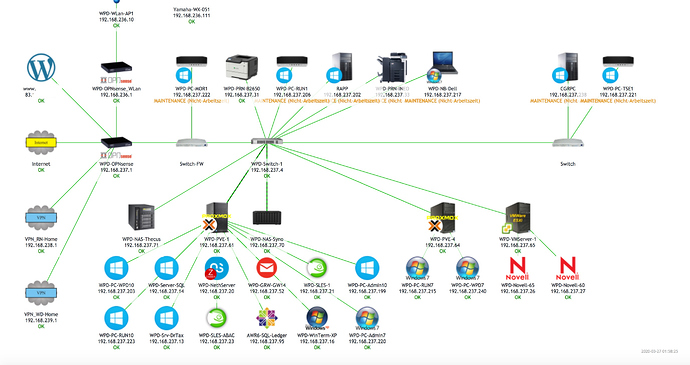

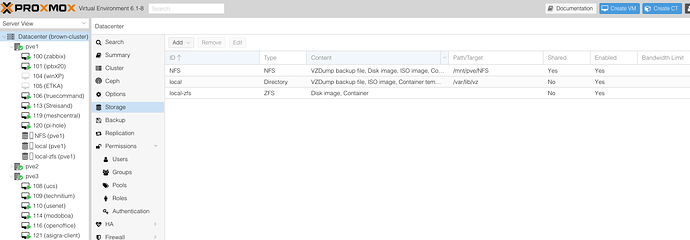

If you DO something, think it through first and do it well.

If you want to set up a cluster, then do it so that you have the potential for 5-10 years of “carefree” running, at least on the Proxmox level!

Then it’s worth your while, and you’ve honestly earned yourself a beer (or whatever your preference is).

Most of my newer Proxmox hosts have only 2x 250 GB or 2x 500 GB SSDs, depending on availability. (300 GB SAS…)

A little local space isn’t wrong, but too much is definitely a waste.

Depending on your hardware, I’d also install lm-sensors, smartmontools (or equivalent) and dmidecode (handy for, say, HP-ROK MS licensing or similar)…
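If you want a quick way to confirm those tools are actually present on every node, here is a minimal sketch. It only looks for the binaries each package ships (`sensors` from lm-sensors, `smartctl` from smartmontools, `dmidecode`); the `TOOLS` mapping and `missing_tools` helper are hypothetical names for illustration. Run it as root, since `smartctl` and `dmidecode` usually live in `/usr/sbin`, which may not be on a normal user’s PATH.

```python
import shutil

# Binary to look for -> Debian package that provides it.
TOOLS = {
    "sensors": "lm-sensors",
    "smartctl": "smartmontools",
    "dmidecode": "dmidecode",
}


def missing_tools(tools=TOOLS, which=shutil.which):
    """Return the sorted package names whose binary cannot be found on PATH."""
    return sorted(pkg for binary, pkg in tools.items() if which(binary) is None)


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All tools present")
```

The `which` parameter is only there so the lookup can be swapped out when testing.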

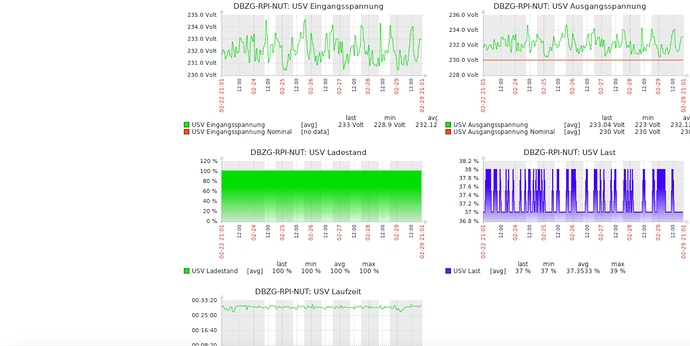

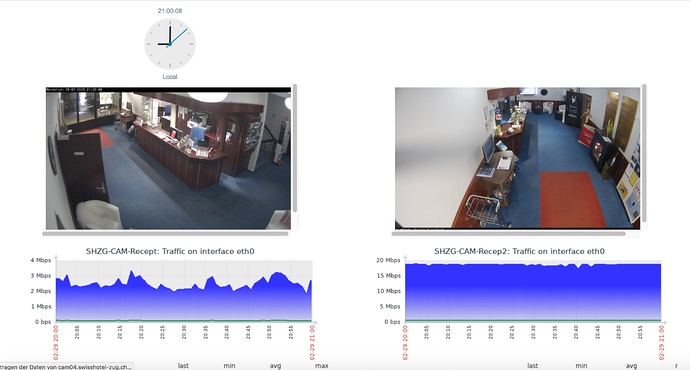

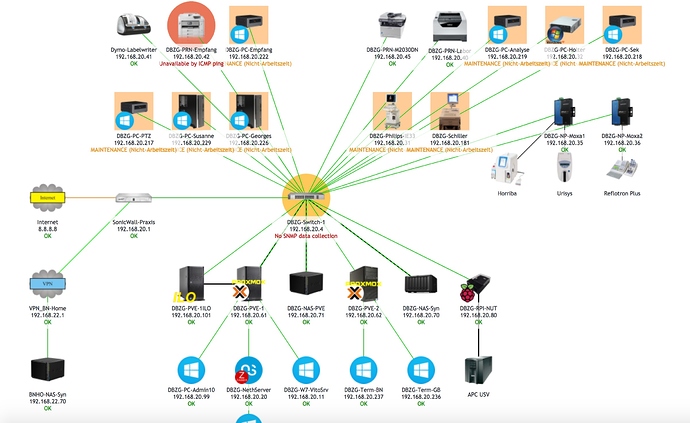

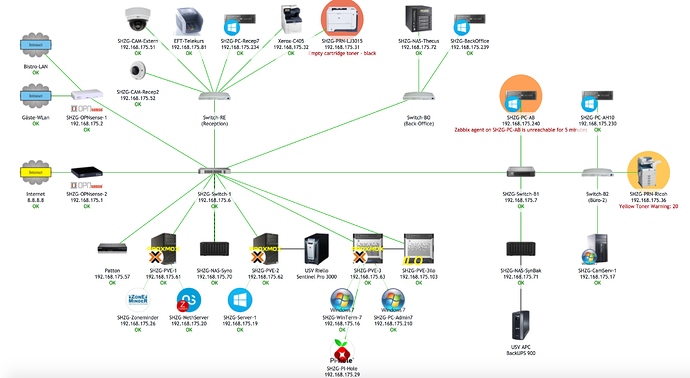

SNMP and Zabbix agents are also a must in my environments…

I wouldn’t want to drive a car without a speedometer (or an oil/fuel gauge); these days you probably wouldn’t just get a fine, you might lose your driving license for speeding. So monitoring system vitals is a must!
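To show what a “speedometer” check boils down to, here is a toy sketch that flags temperature readings above a limit. It assumes an lm-sensors version whose `sensors -j` prints JSON laid out as chip -> feature -> `{"tempN_input": value, ...}` plus an `"Adapter"` string per chip; the 80 °C default and the helper names are arbitrary assumptions. It is no replacement for proper SNMP/Zabbix monitoring, which also keeps history and sends alerts.

```python
import json
import subprocess


def read_sensors():
    """Run `sensors -j` and parse it (assumes an lm-sensors build with JSON output)."""
    out = subprocess.run(["sensors", "-j"], capture_output=True, text=True, check=True).stdout
    return json.loads(out)


def hot_sensors(sensors_json, limit_c=80.0):
    """Return (chip, feature, value) for every temperature input above limit_c."""
    hot = []
    for chip, features in sensors_json.items():
        for feature, values in features.items():
            if not isinstance(values, dict):
                continue  # skip the per-chip "Adapter" string
            for key, value in values.items():
                # Only current readings ("tempN_input"), not max/crit thresholds.
                if key.startswith("temp") and key.endswith("_input") and value > limit_c:
                    hot.append((chip, feature, value))
    return hot


# Example with hand-written data in the assumed layout:
sample = {
    "coretemp-isa-0000": {
        "Adapter": "ISA adapter",
        "Package id 0": {"temp1_input": 85.0, "temp1_max": 84.0},
        "Core 0": {"temp2_input": 60.0},
    }
}
print(hot_sensors(sample))
```

On a real host you would call `hot_sensors(read_sensors(), limit_c=75.0)` instead of using the sample data.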

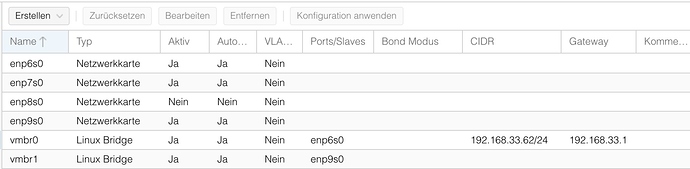

Both Proxmox AND your NAS equipped with 10 GbE NICs! You lucky guy!

I’ve only had 2 clients with NICs like that…

My 2 cents

Andy