Hi everyone… I think I’m going to have to bin NS8.

Today I restarted the Proxmox system, and now I get the error below (even after rolling back, I get the same error; it appears once memory has been cleared or after a full reboot).

{"context":{"action":"list-domain-groups","data":{"domain":"ad.flashelectrical.co.nz"},"extra":{"eventId":"72007e69-2d58-4e07-93da-582bf8e317cc","isNotificationHidden":true,"title":"List domain groups"},"id":"d5fa6924-8644-42fa-a691-0353f333d51c","parent":"","queue":"cluster/tasks","timestamp":"2024-01-01T22:01:56.77019952Z","user":"admin"},"status":"aborted","progress":100,"subTasks":[],"validated":false,"result":{"error":"Traceback (most recent call last):\n File \"/var/lib/nethserver/cluster/actions/list-domain-groups/50list_groups\", line 33, in <module>\n groups = Ldapclient.factory(**domain).list_groups()\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \"/usr/local/agent/pypkg/agent/ldapclient/__init__.py\", line 29, in factory\n return LdapclientAd(**kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^\n File \"/usr/local/agent/pypkg/agent/ldapclient/base.py\", line 37, in __init__\n self.ldapconn = ldap3.Connection(self.ldapsrv,\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \"/usr/local/agent/pyenv/lib64/python3.11/site-packages/ldap3/core/connection.py\", line 363, in __init__\n self._do_auto_bind()\n File \"/usr/local/agent/pyenv/lib64/python3.11/site-packages/ldap3/core/connection.py\", line 389, in _do_auto_bind\n self.bind(read_server_info=True)\n File \"/usr/local/agent/pyenv/lib64/python3.11/site-packages/ldap3/core/connection.py\", line 607, in bind\n response = self.post_send_single_response(self.send('bindRequest', request, controls))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \"/usr/local/agent/pyenv/lib64/python3.11/site-packages/ldap3/strategy/sync.py\", line 160, in post_send_single_response\n responses, result = self.get_response(message_id)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \"/usr/local/agent/pyenv/lib64/python3.11/site-packages/ldap3/strategy/base.py\", line 370, in get_response\n raise LDAPSessionTerminatedByServerError(self.connection.last_error)\nldap3.core.exceptions.LDAPSessionTerminatedByServerError: session terminated by server\n","exit_code":1,"file":"task/cluster/d5fa6924-8644-42fa-a691-0353f333d51c","output":""}}
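The task result is JSON, and the useful part, the Python traceback, sits escaped inside `result.error`. A minimal sketch for pulling out the final exception line, assuming only the field names visible in this log (`status`, `context.action`, `result.error`); `summarize_task` is a hypothetical helper, not part of NS8:

```python
import json


def summarize_task(raw: str) -> dict:
    """Pull the useful fields out of an NS8 task result (hypothetical helper).

    Assumes the layout seen in the log: top-level "status",
    "context.action", and "result.error" holding the Python traceback
    as one escaped string.
    """
    task = json.loads(raw)
    result = task.get("result") or {}
    error = result.get("error") or ""
    # The last non-empty line of a Python traceback is "ExceptionType: message".
    lines = [line for line in error.splitlines() if line.strip()]
    return {
        "action": (task.get("context") or {}).get("action"),
        "status": task.get("status"),
        "exception": lines[-1] if lines else "",
    }


if __name__ == "__main__":
    # Abbreviated copy of the failing task from the log.
    sample = json.dumps({
        "context": {"action": "list-domain-groups"},
        "status": "aborted",
        "result": {
            "error": (
                "Traceback (most recent call last):\n"
                '  File "/var/lib/nethserver/cluster/actions/list-domain-groups/50list_groups", line 33, in <module>\n'
                "ldap3.core.exceptions.LDAPSessionTerminatedByServerError: session terminated by server\n"
            )
        },
    })
    print(summarize_task(sample)["exception"])
```

Run as a script, it prints `ldap3.core.exceptions.LDAPSessionTerminatedByServerError: session terminated by server`. Read together with the full traceback, this says the server closed the connection during the initial bind that `ldap3.Connection` performs via `_do_auto_bind`, before `list_groups()` was ever called.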

This comes after the hours I have spent migrating to NS8 by hand because the NS8 migration tool did not work correctly. I first hit a couple of errors, which I resolved (I had to remove WebTop: even though DNS was correct and a virtual hostname was assigned on the old server, I got an error saying there was no virtual host). The migration then worked (all green ticks), but the following day everything that had been migrated was deleted by the sync script and the software was uninstalled. Because I had a bare-metal server (I could not install Proxmox at the time because the hardware was too new), I was unable to redo the migration, even after following the re-enable services steps on GitHub. I could migrate Nextcloud, but every other option failed or could not be run (there was no option to sync or migrate the domain or the file server).

I can’t log in as users, or access groups or shares. I needed this running today, and now I have nothing to show for my time. I spent the whole Christmas holidays setting up two servers, and both are now defunct.

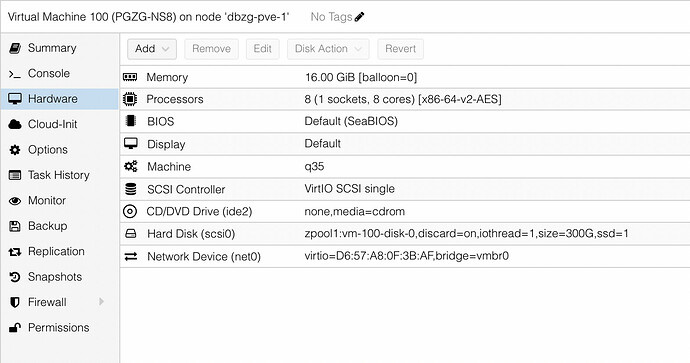

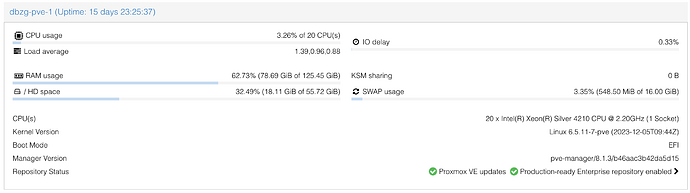

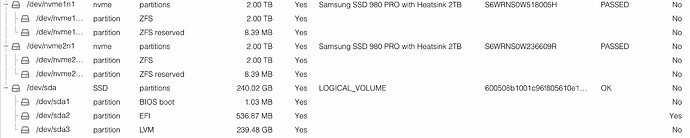

Question: where is the LDAP server, and how do I find it to debug this? Is the database corrupt? I highly doubt it, but one never knows; both servers could have decided to corrupt their ZFS disks (even though they are in RAID 10).
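One way to start on that question is to check whether the domain's LDAP endpoint is reachable at all, before looking at credentials or the directory data. The sketch below is a first step under stated assumptions, not an NS8 tool: `probe_ldap` is hypothetical, it assumes you know the domain controller's host and port (for Active Directory, commonly 389 for LDAP and 636 for LDAPS), and it only opens a TCP connection and, optionally, a TLS handshake. It sends no LDAP bind, so it cannot reproduce the bind failure in the traceback; it can only rule out basic network or TLS problems.

```python
import socket
import ssl


def probe_ldap(host: str, port: int, use_tls: bool, timeout: float = 5.0) -> str:
    """Check whether an LDAP endpoint accepts a TCP (and optionally TLS) connection.

    Hypothetical diagnostic helper: it does NOT send an LDAP bind, it only
    rules out basic network/TLS problems.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            if not use_tls:
                return "tcp ok"
            ctx = ssl.create_default_context()
            # Diagnostic only: accept self-signed certificates so that a
            # certificate problem does not hide a deeper connectivity issue.
            ctx.check_hostname = False
            ctx.verify_mode = ssl.CERT_NONE
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                return f"tls ok ({tls.version()})"
    except OSError as exc:
        # Covers refused connections, timeouts, DNS failures and TLS errors
        # (ssl.SSLError is a subclass of OSError).
        return f"failed: {type(exc).__name__}: {exc}"
```

For example, `probe_ldap("ad.flashelectrical.co.nz", 636, True)` tries LDAPS against the domain name from the log, assuming that name resolves to the domain controller; if it does not, substitute the DC's IP address. If both the TCP and TLS checks succeed, the problem is more likely in the bind itself (account, password, or the directory service) than in the network.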

The DNS servers have not changed and are working just as they did when I started the setup.

Overall I have enjoyed NethServer and its community, and even after checking out UCS, Zentyal and YunoHost, NethServer is still the best option for our business, with the fewest issues. It is a shame that NS8 RC1 is not actually production-ready, despite what the release notice says. If anyone from the design team can point me in the right direction to fix the error above, I’d be extremely grateful. For now, though, I’m using a backup from a week ago and rolling out 7.9 on Proxmox, which will hopefully work.