NethServer Version: 8.whatever’s current

Module: Backup

I’ve been off for the last few months starting a new graduate program, and had just left my NS7 server doing its thing, but a combination of routing problems to that VPS and problems with NS7 itself (specifically that sssd keeps dying) is pushing me to give the migration another shot. So: fresh VPS with Rocky 9, run the install script, initialize the cluster, join my NS7 system to it, migrate the data for Nextcloud and Mail. All of that seems to work fine. Syncing new data after initializing the migration works fine too. Well and good.

What doesn’t seem to be working, though, is the backup. I’ve set up a backup to iDrive e2 as an S3-compatible destination, and the setup completes without errors, but the backup itself doesn’t. And at this point, I have no idea why.

Side rant: If you’re building a product that’s designed to be managed through a web interface, you have to show errors in that web interface.

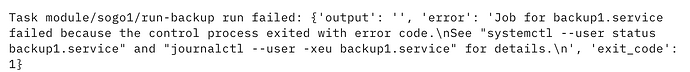

I’d set up the backup to run overnight, and this morning, it didn’t look like it had run. So I clicked to run it now, and in a moment was given a pop-up window saying “Something went wrong,” which is, well, not very informative. But it had a promising link for “more information,” which I clicked, and got this:

(with several more subtasks also listed as failed). This is 0% helpful; I already know from the previous message that it didn’t work. But further down that form there’s another “More info” toggle, which reveals this:

(repeated for each of the apps I’m trying to back up). There’s also a link to “copy task trace” (not to show it, mind you, just to copy it to the clipboard). Here’s what it copies, run through an online JSON parser so it isn’t all on one giant line:

    {
      "context": {
        "action": "run-backup",
        "data": {
          "id": 1
        },
        "extra": {
          "backupName": "Backup to iDrive e2",
          "completion": {
            "extraTextParams": [
              "backupName"
            ],
            "i18nString": "backup.backup_completed_successfully"
          },
          "description": "Running Backup to iDrive e2",
          "title": "Run backup"
        },
        "id": "25053d66-a5ed-4d47-9a8e-00a23f5231f0",
        "parent": "",
        "queue": "cluster/tasks",
        "timestamp": "2024-11-22T12:07:30.994203545Z",
        "user": "admin"
      },
      "status": "aborted",
      "progress": 1,
      "subTasks": [
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/sogo1/run-backup"
            },
            "id": "42e05ef9-93db-4955-940f-00d07c55f376",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/sogo1/42e05ef9-93db-4955-940f-00d07c55f376",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/mariadb1/run-backup"
            },
            "id": "b5b167ee-1f63-4aeb-8f56-19f2020f5bb2",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/mariadb1/b5b167ee-1f63-4aeb-8f56-19f2020f5bb2",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/loki1/run-backup"
            },
            "id": "604cadb7-9254-4eb0-94c2-bbcdfc3f30e7",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/loki1/604cadb7-9254-4eb0-94c2-bbcdfc3f30e7",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/mail1/run-backup"
            },
            "id": "f4f01adb-c587-4ba1-9109-406e83ab5614",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/mail1/f4f01adb-c587-4ba1-9109-406e83ab5614",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/grafana1/run-backup"
            },
            "id": "ddca1edd-7802-44b0-bda7-115678d79d97",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/grafana1/ddca1edd-7802-44b0-bda7-115678d79d97",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/nextcloud1/run-backup"
            },
            "id": "14dd3d7b-728e-4710-a739-a39cf7feaf27",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/nextcloud1/14dd3d7b-728e-4710-a739-a39cf7feaf27",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/crowdsec1/run-backup"
            },
            "id": "b44d7224-82cc-4621-99b6-6d2fd6f9e575",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1-crowdsec1.service failed because the control process exited with error code.\nSee \"systemctl status backup1-crowdsec1.service\" and \"journalctl -xeu backup1-crowdsec1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/crowdsec1/b44d7224-82cc-4621-99b6-6d2fd6f9e575",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/traefik1/run-backup"
            },
            "id": "27d554ee-5001-42e4-afd4-e20f7f125288",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/traefik1/27d554ee-5001-42e4-afd4-e20f7f125288",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/collabora1/run-backup"
            },
            "id": "7a95556d-f51a-4068-8f6d-c7535e1c9cc5",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/collabora1/7a95556d-f51a-4068-8f6d-c7535e1c9cc5",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/webserver1/run-backup"
            },
            "id": "a741c038-3a56-49f9-8a6f-1458b1dce0de",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/webserver1/a741c038-3a56-49f9-8a6f-1458b1dce0de",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/roundcubemail1/run-backup"
            },
            "id": "3b99161e-a142-4d5d-9d03-fb3879f7a89b",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/roundcubemail1/3b99161e-a142-4d5d-9d03-fb3879f7a89b",
            "output": ""
          }
        },
        {
          "context": {
            "action": "run-backup",
            "data": {
              "id": 1
            },
            "extra": {
              "description": "run-backup agent action",
              "isNotificationHidden": true,
              "title": "module/prometheus1/run-backup"
            },
            "id": "f1e22c0a-7ba4-4cb8-8130-fd118f007711",
            "parent": "25053d66-a5ed-4d47-9a8e-00a23f5231f0"
          },
          "status": "aborted",
          "progress": 0,
          "subTasks": [],
          "result": {
            "error": "Job for backup1.service failed because the control process exited with error code.\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\n",
            "exit_code": 1,
            "file": "task/module/prometheus1/f1e22c0a-7ba4-4cb8-8130-fd118f007711",
            "output": ""
          }
        }
      ],
      "validated": true,
      "result": {
        "error": "Task module/sogo1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/nextcloud1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/traefik1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/collabora1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/webserver1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/crowdsec1/run-backup run failed: {'output': '', 'error': 'Job for backup1-crowdsec1.service failed because the control process exited with error code.\\nSee \"systemctl status backup1-crowdsec1.service\" and \"journalctl -xeu backup1-crowdsec1.service\" for details.\\n', 'exit_code': 1}\nTask module/loki1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/prometheus1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/grafana1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/roundcubemail1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/mariadb1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\nTask module/mail1/run-backup run failed: {'output': '', 'error': 'Job for backup1.service failed because the control process exited with error code.\\nSee \"systemctl --user status backup1.service\" and \"journalctl --user -xeu backup1.service\" for details.\\n', 'exit_code': 1}\n12\n",
        "exit_code": 1,
        "file": "task/cluster/25053d66-a5ed-4d47-9a8e-00a23f5231f0",
        "output": ""
      }
    }
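
A trace this repetitive is easier to read once it is boiled down to one line per failed sub-task. The following is only a minimal Python sketch, not anything NethServer ships: it assumes the copied trace has been saved to a file (the name `trace.json` is made up), that the field names match the trace above, and that each error string follows the systemd "Job for … failed" wording seen here. The helper name `failed_subtasks` is hypothetical too.

```python
import json
import re
import sys


def failed_subtasks(trace):
    """Return (module, unit, scope) for every sub-task that reported an error.

    Assumes the layout of the trace shown above: each entry in "subTasks"
    has context.extra.title like "module/sogo1/run-backup" and a
    result.error string written by systemd.
    """
    rows = []
    for sub in trace.get("subTasks", []):
        error = sub.get("result", {}).get("error", "")
        if not error:
            continue  # this sub-task did not report an error
        title = sub.get("context", {}).get("extra", {}).get("title", "")
        parts = title.split("/")
        # The middle part of "module/<id>/run-backup" is the module ID.
        module = parts[1] if len(parts) > 1 else title
        # systemd's message begins "Job for <unit> failed ...".
        match = re.search(r"Job for (\S+) failed", error)
        unit = match.group(1) if match else "?"
        # A "--user" hint means the unit belongs to a per-user systemd
        # instance rather than the system-wide one.
        scope = "user" if "systemctl --user" in error else "system"
        rows.append((module, unit, scope))
    return rows


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage (hypothetical script name): python3 summarize_trace.py trace.json
    with open(sys.argv[1]) as fh:
        for module, unit, scope in failed_subtasks(json.load(fh)):
            print(f"{module:16} {unit:28} {scope}")
```

Run against the trace above, this would list all twelve modules: eleven pointing at a `--user` unit named `backup1.service`, and `crowdsec1` alone pointing at the system-wide `backup1-crowdsec1.service`. It adds no information the trace doesn't already contain; it just makes that split visible at a glance.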

So I’m four clicks into trying to figure out what went wrong, I’ve exhausted what the GUI can (apparently) tell me, and all I know is “the control process exited with error code,” and that it’s sending me to the shell to find out more.

So to the shell I go:

    [root@ns8 ~]# systemctl --user status backup1.service
    Failed to connect to bus: No medium found
    [root@ns8 ~]# journalctl --user -xeu backup1.service
    No journal files were found.