All right… let’s play…

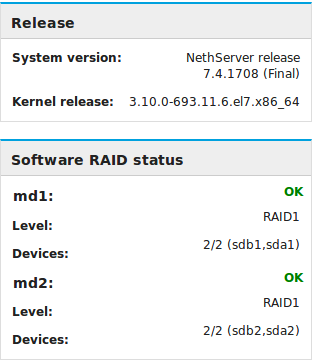

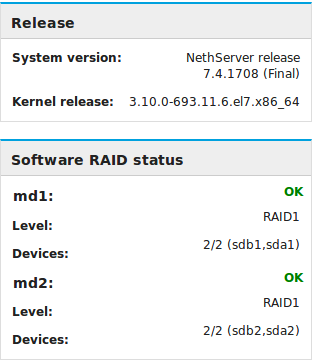

I installed NS7 with default settings in unattended mode. That way the two disks I created in VBox were automagically configured as an MDADM RAID1.

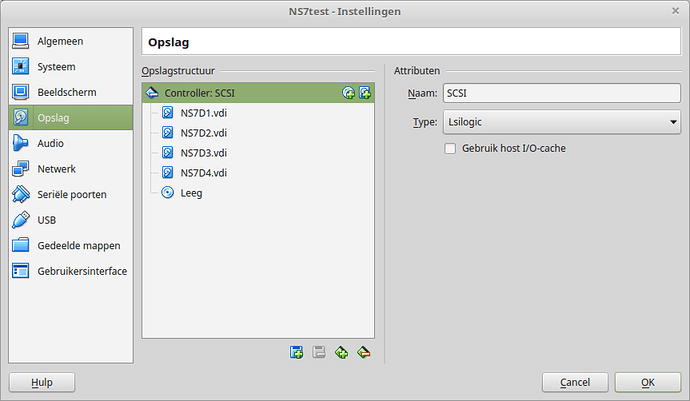

Now I bring the VM down and add 2 more VDisks:

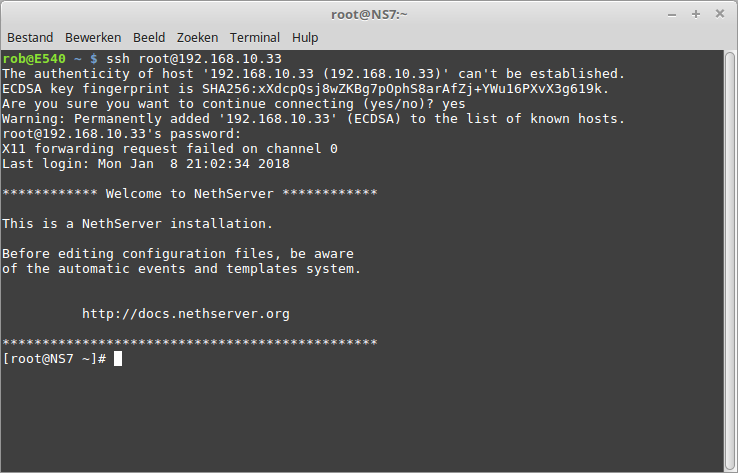

Then I spin the VM up again and SSH into it:

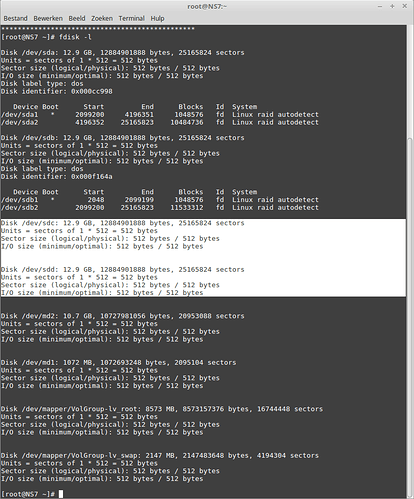

First I check the disks with

fdisk -l

The highlighted rows are the two newly added disks, /dev/sdc and /dev/sdd.

/dev/sdc and /dev/sdd after replicating the partition layout from /dev/sda and /dev/sdb:

Disk /dev/sdc: 12.9 GB, 12884901888 bytes, 25165824 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x00000000

Device Boot Start End Blocks Id System

/dev/sdc1 * 2099200 4196351 1048576 fd Linux raid autodetect

/dev/sdc2 4196352 25165823 10484736 fd Linux raid autodetect

Disk /dev/sdd: 12.9 GB, 12884901888 bytes, 25165824 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x00000000

Device Boot Start End Blocks Id System

/dev/sdd1 * 2099200 4196351 1048576 fd Linux raid autodetect

/dev/sdd2 4196352 25165823 10484736 fd Linux raid autodetect
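The post does not show how the partition layout was replicated onto the new disks. One common way, sketched here purely as an assumption, is to dump the layout of an existing disk with sfdisk and feed it to the new one. The function name is made up for illustration, and the call is destructive for the target disk, so double-check the device names first.

```shell
# Assumption: sfdisk is used for the copy; the original post does not show this step.
# Hypothetical helper: copy the partition layout of disk $1 onto disk $2.
copy_partition_table() {
    src="$1"
    dst="$2"
    # Refuse to copy a disk onto itself.
    if [ "$src" = "$dst" ]; then
        echo "source and target must differ" >&2
        return 1
    fi
    # sfdisk -d dumps the layout as a script that sfdisk can read back on stdin.
    sfdisk -d "$src" | sfdisk "$dst"
}

# Usage (as root):
#   copy_partition_table /dev/sda /dev/sdc
#   copy_partition_table /dev/sdb /dev/sdd
```

Running fdisk -l again afterwards should show the same partitions on the new disks as in the output above.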

Add them to the RAID sets:

[root@NS7 ~]# mdadm /dev/md1 --add /dev/sdc1 /dev/sdd1

mdadm: added /dev/sdc1

mdadm: added /dev/sdd1

[root@NS7 ~]# mdadm /dev/md2 --add /dev/sdc2 /dev/sdd2

mdadm: added /dev/sdc2

mdadm: added /dev/sdd2
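Both arrays already had their full set of two active members, so the newly added partitions should sit there as spares until the arrays are grown. In /proc/mdstat, spares carry an (S) suffix. A small sketch for listing them; the helper name is made up and the sample line is illustrative, not captured from the VM in this post.

```shell
# Hypothetical helper: print the spare members named in mdstat-style text read from stdin.
list_spares() {
    # Put every word on its own line, then keep only names of the form "sdc2[2](S)".
    tr ' ' '\n' | sed -n 's/^\([a-z0-9]*\)\[[0-9]*](S)$/\1/p'
}

# Illustrative input line (an assumption about what the array would look like at this point):
echo 'md2 : active raid1 sdd2[3](S) sdc2[2](S) sdb2[1] sda2[0]' | list_spares

# On the server itself:
#   list_spares < /proc/mdstat
```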

Change the RAID level. md1 stays RAID1 but now spans all four disks; md2 is converted to RAID5:

[root@NS7 ~]# mdadm --grow /dev/md1 --level=1 --raid-disks=4

raid_disks for /dev/md1 set to 4

[root@NS7 ~]# mdadm --grow /dev/md2 --level=5 --raid-disks=4

mdadm: level of /dev/md2 changed to raid5
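As a rough sanity check on what the level change buys: RAID1 exposes the capacity of a single member however many mirrors it has, while RAID5 exposes the capacity of all members but one. A minimal sketch using the 10484736 KiB size of the second partition from the fdisk output; md metadata overhead is ignored and the function names are made up for illustration.

```shell
# Hypothetical helpers; sizes are in KiB (the "Blocks" column of fdisk -l).
# $1 = number of members, $2 = size of one member.
raid1_usable_kib() { echo "$2"; }
raid5_usable_kib() { echo $(( ($1 - 1) * $2 )); }

raid1_usable_kib 4 10484736   # prints 10484736 (about 10 GiB)
raid5_usable_kib 4 10484736   # prints 31454208 (about 30 GiB)
```

So md2 should end up roughly three times its original size once the reshape has finished.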

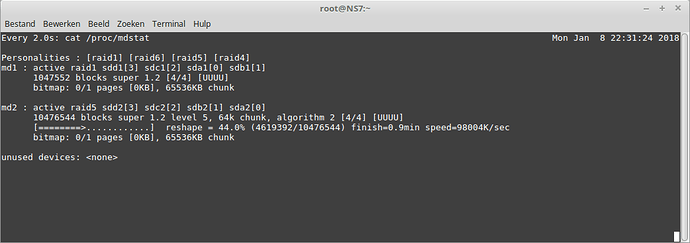

Watching the raidset build:

([ctrl]-c to exit)
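The command used for watching is not written out in the post. A common choice, given here as an assumption, is to poll /proc/mdstat, where the kernel reports resync, recovery and reshape progress. The helper below is a made-up convenience that extracts just the progress figure.

```shell
# Hypothetical helper: pull "reshape = 12.6%"-style progress out of mdstat text on stdin.
md_progress() {
    grep -oE '(resync|recovery|reshape) *= *[0-9.]+%'
}

# Live view on the server (Ctrl-C to exit); assumes the watch utility is installed:
#   watch -n 5 cat /proc/mdstat
# One-off check:
#   md_progress < /proc/mdstat
```

If nothing is being rebuilt, md_progress prints nothing.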

pvresize /dev/md2 gives:

[root@NS7 ~]# pvresize /dev/md2

Physical volume "/dev/md2" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

lvextend --resizefs -l +100%FREE /dev/mapper/VolGroup-lv_root gives:

[root@NS7 ~]# lvextend --resizefs -l +100%FREE /dev/mapper/VolGroup-lv_root

Size of logical volume VolGroup/lv_root changed from 7.98 GiB (2044 extents) to 27.97 GiB (7161 extents).

Logical volume VolGroup/lv_root successfully resized.

meta-data=/dev/mapper/VolGroup-lv_root isize=512 agcount=4, agsize=523264 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=2093056, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 2093056 to 7332864
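The figures in the output above agree with each other, which is a quick way to confirm that the filesystem really fills the logical volume. A small arithmetic sketch, assuming a 4 MiB LVM extent size (the LVM default, and consistent with 2044 extents being 7.98 GiB) and the 4096-byte XFS block size shown in the output; the function names are made up.

```shell
# Hypothetical helpers for cross-checking the lvextend and XFS figures.
# $1 = number of extents, $2 = extent size in MiB (assumed to be 4).
extents_to_mib() { echo $(( $1 * $2 )); }
# $1 = number of filesystem blocks, $2 = block size in bytes.
fs_blocks_to_mib() { echo $(( $1 * ($2 / 1024) / 1024 )); }

extents_to_mib 7161 4           # new size of lv_root according to lvextend
fs_blocks_to_mib 7332864 4096   # new size of the filesystem according to XFS
```

Both lines print 28644 (MiB), which is the 27.97 GiB that lvextend reports.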

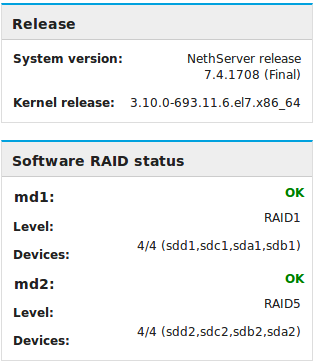

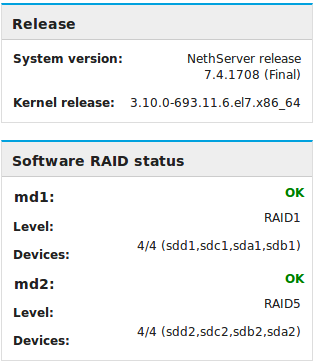

And the big question: what will the NetGui Dashboard say?

Thanks @fausp for this mini tutorial. It works flawlessly…

I added some screenshots to make it even clearer, especially for the less tech-savvy members of our forums.