Dear Community,

I would like to open up some design decisions for discussion. This time, it is not primarily about the design decisions made by the Nethserver 8 developers, but about how to set up new systems as a whole, which in my case regularly rely on VM hosts, virtual machines, and containers.

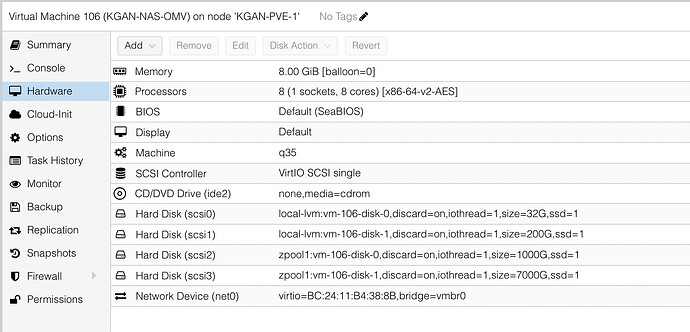

Decision 1 - The VM Host

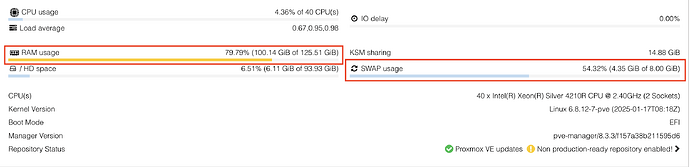

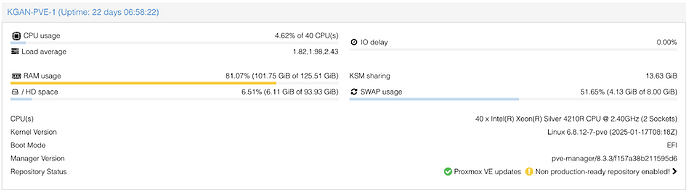

Proxmox VE (PVE) is a fantastic VM host, and combined with the Proxmox Backup Server (PBS) it leaves little to be desired, at least at the scale of the systems I manage. These are usually single PVE hosts, often with an integrated PBS. I used to work with VMware (and earlier with VirtualBox-based VM hosts), but PVE is the best solution for me, not least because it is highly customizable, just the way I like it in a Linux environment. (My PVE is often installed on top of an existing Debian system.)

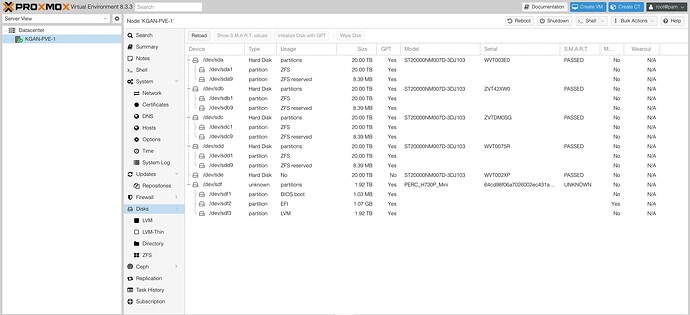

Decision 2 - The Filesystem on the VM Host (Proxmox VE)

The root filesystem is usually ext4, which is perfectly sufficient for me because the system disk is only used for booting and storing configuration. If anything goes wrong here, it can be quickly restored.

What matters more is the filesystem used to store the VM images. It should at least be encrypted, because it often contains sensitive data and the legal requirements are clear in this regard. Instead of configuring a separate encrypted filesystem for each VM (which would need to be unlocked each time), I decided to encrypt the whole VM storage with LUKS and unlock it after PVE starts (manually or by another method, but without storing the key on the PVE host itself). On top of the LUKS volume I normally use an ext4 filesystem, which has worked flawlessly so far.
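The unlock step after boot can be sketched roughly as follows. This is only an illustration under assumptions: the device path, mapper name, and mount point are hypothetical, and the passphrase is assumed to arrive from outside the host (typed in or delivered over SSH). By default the function only returns the commands it would run.

```python
import subprocess
from typing import List


def luks_open_cmd(device: str, mapper_name: str) -> List[str]:
    """Build the cryptsetup call that maps `device` to /dev/mapper/<mapper_name>.

    `--key-file=-` makes cryptsetup read the passphrase from stdin,
    so it never has to be stored in a file on the PVE host.
    """
    return ["cryptsetup", "open", "--type", "luks", "--key-file=-", device, mapper_name]


def mount_cmd(mapper_name: str, mount_point: str) -> List[str]:
    """Build the mount call for the unlocked mapping."""
    return ["mount", f"/dev/mapper/{mapper_name}", mount_point]


def unlock_and_mount(
    device: str,
    mapper_name: str,
    mount_point: str,
    passphrase: bytes,
    dry_run: bool = True,
) -> List[List[str]]:
    """Return the two commands; run them only when dry_run is False (needs root)."""
    commands = [luks_open_cmd(device, mapper_name), mount_cmd(mapper_name, mount_point)]
    if not dry_run:
        # With --key-file=- all of stdin is used as the key,
        # so the passphrase must not carry a trailing newline.
        subprocess.run(commands[0], input=passphrase, check=True)
        subprocess.run(commands[1], check=True)
    return commands
```

Calling `unlock_and_mount("/dev/sdb1", "vmstore", "/mnt/vmstore", b"...")` with the default `dry_run=True` is a safe way to review the commands before running them for real.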

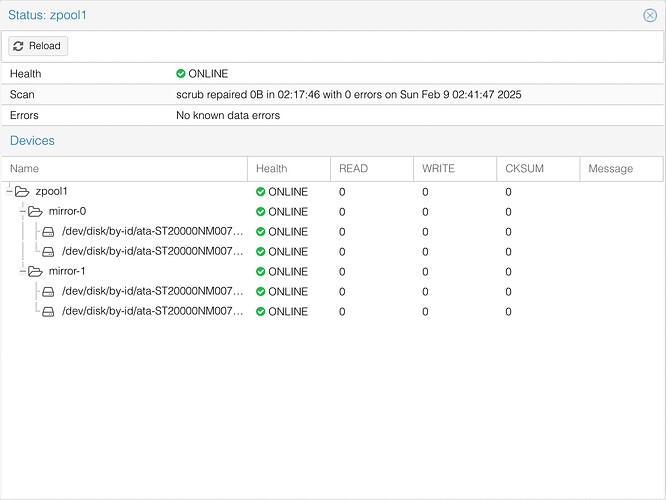

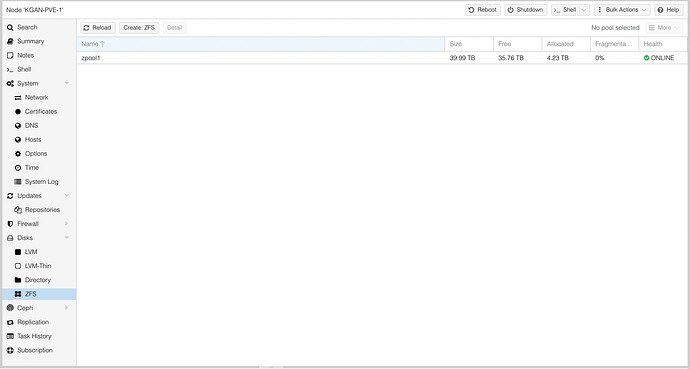

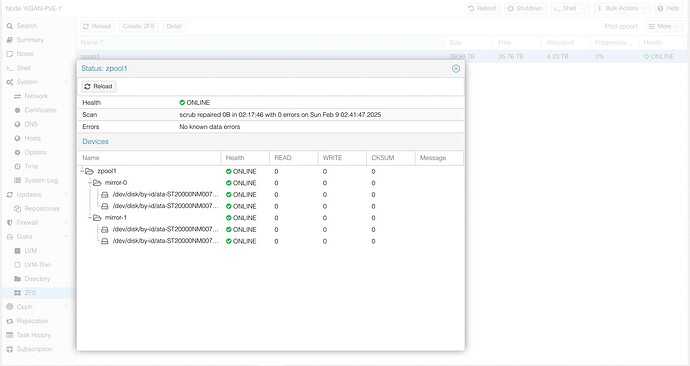

However, it is always worth considering new methods. ZFS is an interesting option for VM storage, but for my purposes it is far too complex and resource-intensive. I also prefer solutions that are set up traditionally (I avoid LVM where possible) and can be managed manually. In addition, ZFS is said to work poorly with consumer hardware and to put significant strain on it.

To still get checksums and a well-functioning RAID1 with reliable “self-healing,” I use a combination of LUKS and BTRFS on my current PVE setup. Specifically, this involves two NVMe drives of different types, each encrypted with LUKS. BTRFS was created on the two unlocked LUKS devices, which are combined into one BTRFS RAID1 volume. After PVE starts and the LUKS devices are unlocked, the BTRFS RAID1 is available and runs smoothly.
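For readers who want to reproduce the layout, here is a rough sketch of the commands involved, written as small Python helpers that only build the command lines. The mapper names, label, and mount point are made up for illustration, and this is not necessarily the exact sequence used on my host.

```python
from typing import List, Sequence


def mkfs_btrfs_raid1_cmd(mapped_devices: Sequence[str], label: str) -> List[str]:
    """Create one BTRFS filesystem across all devices, with data (-d) and
    metadata (-m) both stored in the raid1 profile. Destroys existing data."""
    if len(mapped_devices) < 2:
        raise ValueError("BTRFS RAID1 needs at least two devices")
    return ["mkfs.btrfs", "-L", label, "-d", "raid1", "-m", "raid1", *mapped_devices]


def mount_plan(any_member: str, mount_point: str) -> List[List[str]]:
    """After both LUKS devices are unlocked: let BTRFS discover its members,
    then mount the volume through any one of them."""
    return [
        ["btrfs", "device", "scan"],
        ["mount", "-t", "btrfs", any_member, mount_point],
    ]


def scrub_cmd(mount_point: str) -> List[str]:
    """A scrub reads all data, verifies checksums, and repairs bad blocks
    from the good RAID1 copy. -B keeps the command in the foreground."""
    return ["btrfs", "scrub", "start", "-B", mount_point]


if __name__ == "__main__":
    # Hypothetical names: two unlocked LUKS mappings, one mount point.
    devices = ["/dev/mapper/nvme_a", "/dev/mapper/nvme_b"]
    plan = [mkfs_btrfs_raid1_cmd(devices, "vmstore")]
    plan += mount_plan(devices[0], "/mnt/vmstore")
    plan.append(scrub_cmd("/mnt/vmstore"))
    for command in plan:
        print(" ".join(command))
```

The `mkfs.btrfs` step is needed only once; the scan and mount steps repeat after every boot, and the scrub is something one would schedule periodically.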

Additionally, there is a hard disk set up as BTRFS single (also on LUKS), which receives daily snapshots from the BTRFS RAID1. This way, you can quickly return to a working VM state, independently of the integrated PVE snapshots. The BTRFS snapshots can easily be written back, linked in, and used (or used directly from the backup disk).
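A daily snapshot transfer of this kind could look roughly like the sketch below. It assumes the VM images live in a BTRFS subvolume and that snapshots are named by date; all paths are hypothetical, and the helper names are mine rather than part of any tool.

```python
import datetime
import subprocess
from typing import List, Optional, Tuple


def snapshot_cmd(subvolume: str, snapshot_dir: str, day: datetime.date) -> List[str]:
    """Create a read-only (-r) snapshot; btrfs send only accepts read-only ones."""
    return ["btrfs", "subvolume", "snapshot", "-r", subvolume, f"{snapshot_dir}/{day.isoformat()}"]


def send_receive_cmds(
    snapshot: str, target_dir: str, parent: Optional[str] = None
) -> Tuple[List[str], List[str]]:
    """Build the two ends of `btrfs send | btrfs receive`.

    With a parent snapshot (-p) only the difference is transferred; the
    parent must already exist on both the source and the backup disk.
    """
    send = ["btrfs", "send"]
    if parent is not None:
        send += ["-p", parent]
    send.append(snapshot)
    return send, ["btrfs", "receive", target_dir]


def transfer(snapshot: str, target_dir: str, parent: Optional[str] = None) -> None:
    """Pipe the send stream into receive (needs root and real BTRFS volumes)."""
    send_cmd, receive_cmd = send_receive_cmds(snapshot, target_dir, parent)
    sender = subprocess.Popen(send_cmd, stdout=subprocess.PIPE)
    try:
        subprocess.run(receive_cmd, stdin=sender.stdout, check=True)
    finally:
        sender.stdout.close()
        return_code = sender.wait()
    if return_code != 0:
        raise subprocess.CalledProcessError(return_code, send_cmd)
```

The first transfer has to be a full one (no parent); from then on, passing the previous day's snapshot as `parent` keeps the daily run small.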

The PBS solution is excellent, especially because you can mirror all backups to a remote PBS. This would even cover regional disaster scenarios, though with long recovery times. Restoring individual files (within the VM) is also possible.

As an additional emergency solution (if you need to access backed-up data natively, without PVE or PBS), there are also regular incremental rsync “pull” backups of most VM content. These backups can also be easily distributed to remote locations.
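One common way to get incremental rsync pull backups is `--link-dest`, which hard-links unchanged files to the previous run so that every dated directory looks like a full copy. The sketch below assumes that approach; the host name, directory layout, and date-named folders are hypothetical and may differ from what I actually run.

```python
from typing import List, Optional, Sequence


def latest_backup(existing: Sequence[str]) -> Optional[str]:
    """Pick the newest backup directory; ISO dates sort correctly as strings."""
    return max(existing) if existing else None


def rsync_pull_cmd(
    remote: str,
    source_dir: str,
    backup_root: str,
    today: str,
    existing: Sequence[str],
) -> List[str]:
    """Build an rsync call that pulls `source_dir` from `remote` into
    <backup_root>/<today>, hard-linking unchanged files to the last backup."""
    cmd = ["rsync", "-a", "--delete", "--numeric-ids"]
    previous = latest_backup([name for name in existing if name != today])
    if previous is not None:
        # An absolute path avoids rsync resolving it relative to the destination.
        cmd.append(f"--link-dest={backup_root}/{previous}")
    # Trailing slashes: copy the directory contents, not the directory itself.
    cmd += [f"{remote}:{source_dir}/", f"{backup_root}/{today}/"]
    return cmd


if __name__ == "__main__":
    print(" ".join(rsync_pull_cmd(
        "backup@vm1.example.org", "/home", "/mnt/backup/vm1",
        "2024-01-03", ["2024-01-01", "2024-01-02"],
    )))
```

Because each dated directory is a plain file tree, it can be read without PVE or PBS, which is the point of this emergency path.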

This is how I would set up the PVE configuration going forward.

Relation to Nethserver

To tie this back to Nethserver: previous Nethserver 7 installations used Restic for network backups (local and remote). Unfortunately, I haven’t found a useful alternative for Nethserver 8 yet. The built-in backup solution is primarily geared towards cloud storage or separately configured S3 alternatives, which is too complex for my purposes. I would prefer a local backup (to a local drive or VM image) from which I could restore containers or migrate to a new node. Unfortunately, this has never worked properly for me, which has so far prevented the move to Nethserver 8.

Decision 3 - Filesystems for Nethserver 8

For the new Nethserver, there is also the question of which filesystems to prefer inside Nethserver 8 and other (mostly Linux-based) VMs.

Data integrity seems to be fundamentally ensured by the BTRFS RAID1, but if bit errors occur (for whatever reason), the PVE host can only attribute them to the VM image as a whole, not to individual files inside the VM.

So, to potentially identify and selectively replace “corrupted” files within a VM, a filesystem that at least keeps track of such errors would be beneficial. Again, BTRFS comes to mind.

For Nethserver 8, this would be feasible for “/home” using a BTRFS-formatted disk (VM image).
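To illustrate what "keeping track of such errors" could mean in practice: after a scrub, `btrfs device stats <mountpoint>` prints per-device error counters, and a small script can flag the non-zero ones. The sample text below is made up for illustration (hypothetical device name, invented counter values); it is not output from a real system.

```python
import re
from typing import Dict, Tuple

# One line of `btrfs device stats` output looks like: [/dev/sdb1].corruption_errs  0
STATS_LINE = re.compile(r"^\[(?P<device>[^\]]+)\]\.(?P<counter>\w+)\s+(?P<value>\d+)$")


def parse_device_stats(output: str) -> Dict[Tuple[str, str], int]:
    """Map (device, counter name) to the counter value; skip unrelated lines."""
    stats: Dict[Tuple[str, str], int] = {}
    for line in output.splitlines():
        match = STATS_LINE.match(line.strip())
        if match:
            stats[(match["device"], match["counter"])] = int(match["value"])
    return stats


def nonzero_counters(stats: Dict[Tuple[str, str], int]) -> Dict[Tuple[str, str], int]:
    """Keep only counters that indicate at least one recorded error."""
    return {key: value for key, value in stats.items() if value > 0}


# Illustrative sample only, not real measurements.
SAMPLE = """\
[/dev/sdb1].write_io_errs    0
[/dev/sdb1].read_io_errs     0
[/dev/sdb1].flush_io_errs    0
[/dev/sdb1].corruption_errs  2
[/dev/sdb1].generation_errs  0
"""

if __name__ == "__main__":
    for (device, counter), value in nonzero_counters(parse_device_stats(SAMPLE)).items():
        print(f"{device}: {counter} = {value}")
```

On a single-device BTRFS inside a VM, a scrub can detect such corruption but has no second copy of the data to repair it from, so the affected files would then be restored from one of the backups.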

Unfortunately, it turned out early on that RHEL clones don’t support BTRFS. One could certainly use a different kernel or add kernel modules, but would that be a reliable base for a production system?

To natively support BTRFS, one would have to consider a Debian-based system, but my previous experiences with Nethserver 8 on Debian have been less than ideal compared to, for example, Rocky 9.

What have your experiences been with Nethserver 8 on Debian?

For certain (professional) scenarios, a Debian base might not be feasible due to the lack of “subscription” options. Unfortunately, this affects precisely the configurations for which the considerations above were made.

Moreover, there are occasional reports of incompatibilities between BTRFS and Docker containers, which would particularly affect Nethserver 8.

What are your experiences with BTRFS in general, or specifically under Nethserver 8? What do the developers say about such a configuration?

Best regards,

Yummiweb