@danb35

That’s what high availability is all about. Besides maintenance, the main reason for it is hardware failure. At 02:00 in the morning, when Murphy schedules most outages, there’s usually no one at the helm. The system is on autopilot, so to speak.

Autopilot needs a few simple rules that define when and what happens when a node goes down (e.g. live migration for planned downtime).

You need to think this through, even more so if your Proxmox nodes differ in RAM/CPU power. In your case all 3 are identical, which saves trouble and planning. Your fencing rules can be kept simple…
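To make the "think it through" part concrete, here is a simplified sketch of the usual capacity question: if any single node dies, do the survivors have enough RAM to restart everything? It assumes RAM is the only bottleneck and that guests can be packed freely (no per-VM bin packing, no overcommit, no ballooning/KSM). The function name, node names and GiB figures are hypothetical.

```python
# Simplified N-1 RAM check: can the surviving nodes absorb any single failed node?
# Assumption: RAM is the bottleneck and guests can be packed freely
# (no per-VM bin packing, no overcommit, no ballooning/KSM). Figures are hypothetical.


def unsurvivable_failures(nodes: dict[str, tuple[int, int]]) -> list[str]:
    """nodes maps a node name to (ram_capacity_gib, ram_used_gib).

    Returns the nodes whose failure would leave the survivors without enough
    total RAM to run every guest in the cluster.
    """
    total_used = sum(used for _, used in nodes.values())
    total_cap = sum(cap for cap, _ in nodes.values())
    return sorted(
        name
        for name, (cap, _) in nodes.items()
        # survivors' capacity is smaller than everything that must keep running
        if total_used > total_cap - cap
    )


if __name__ == "__main__":
    equal = {"pve1": (64, 40), "pve2": (64, 40), "pve3": (64, 40)}
    unequal = {"pve1": (128, 110), "pve2": (32, 10), "pve3": (32, 10)}
    print(unsurvivable_failures(equal))    # []
    print(unsurvivable_failures(unequal))  # ['pve1']
```

With three equal nodes the rule of thumb falls out directly: keep each node below roughly two thirds of its RAM. With unequal nodes, losing the big one is the case you have to plan for.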

Fencing

Fencing is an essential part of Proxmox VE HA (version 2.0 and later); without fencing, HA will not work. REMEMBER: you NEED at least one fencing device for every node. Detailed steps to configure and test fencing can be found here.
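Fencing goes hand in hand with quorum: only the side of the cluster that still holds a majority of votes may recover guests, and the nodes on the other side must be fenced first so that no guest ever runs twice. A minimal sketch of the majority rule, assuming one vote per node (corosync's default); the function names are just for illustration.

```python
# Majority-quorum rule, assuming one vote per node (corosync's default).
# Only a partition with quorum may recover guests; nodes on the losing
# side must be fenced first so no guest ever runs on two nodes at once.


def quorum(total_votes: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return total_votes // 2 + 1


def has_quorum(votes_present: int, total_votes: int) -> bool:
    """True if the votes still visible form a majority of the cluster."""
    return votes_present >= quorum(total_votes)


if __name__ == "__main__":
    for total in (2, 3, 4, 5):
        q = quorum(total)
        print(f"{total} nodes: quorum={q}, tolerates {total - q} failed node(s)")
```

With 3 nodes, as in your cluster, one node can fail and the other two still hold quorum. A 2-node cluster cannot lose either node without an extra vote (e.g. a QDevice).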

Another option is grouping resources, e.g. by priority or by resource requirements…

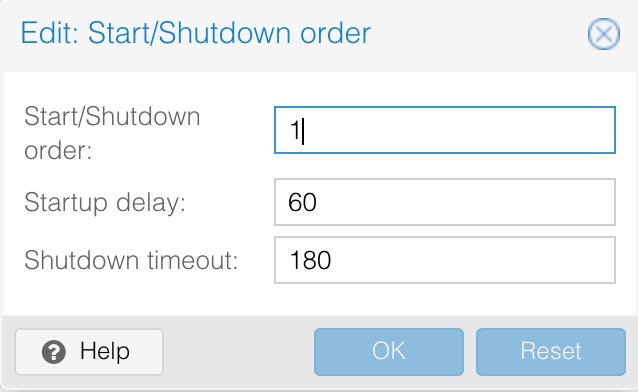

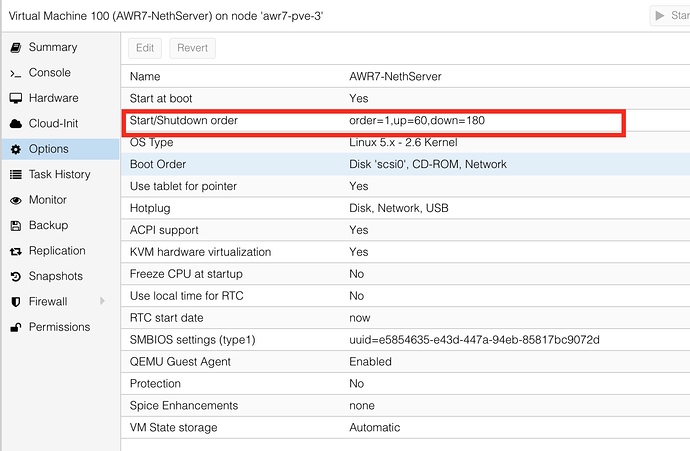

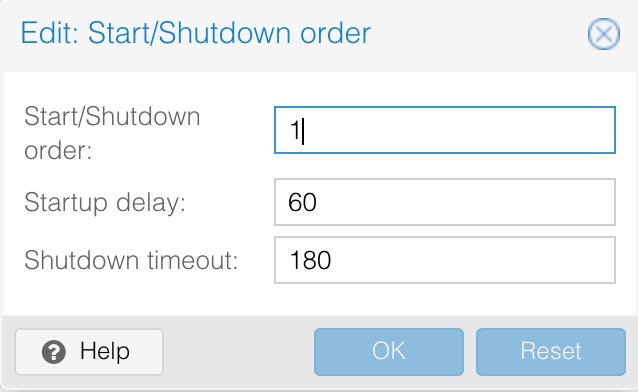

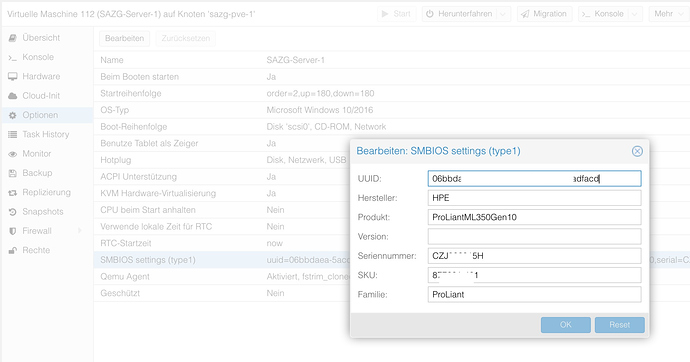
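HA groups give each member node a priority: resources run on the online member(s) with the highest priority, are spread across members that share it, and a "restricted" group keeps its resources stopped rather than letting them run outside the group. The following is only a simplified model of that documented behaviour, not the actual Proxmox HA manager logic (it ignores e.g. `nofailback`); `pick_recovery_node` and the node names are hypothetical.

```python
from typing import Optional


def pick_recovery_node(
    group: dict[str, int],   # group member -> priority (higher is preferred)
    online: set[str],        # nodes currently online and quorate
    load: dict[str, int],    # node -> number of HA resources already running there
    restricted: bool = False,
) -> Optional[str]:
    """Simplified model: choose where an HA resource should run."""
    members = {n: p for n, p in group.items() if n in online}
    if members:
        top = max(members.values())
        candidates = [n for n, p in members.items() if p == top]
    elif restricted:
        return None  # restricted group with no member online: resource stays stopped
    else:
        candidates = sorted(online)  # unrestricted group: any online node will do
    if not candidates:
        return None
    # Among equally preferred nodes, spread the load: fewest resources, then name.
    return min(candidates, key=lambda n: (load.get(n, 0), n))


if __name__ == "__main__":
    group = {"pve1": 2, "pve2": 1, "pve3": 1}
    print(pick_recovery_node(group, {"pve1", "pve2", "pve3"}, {}))
    print(pick_recovery_node(group, {"pve2", "pve3"}, {"pve2": 3, "pve3": 1}))
```

Here `pve1` is the preferred home; if it fails, the resource lands on whichever of `pve2`/`pve3` is less busy.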

One of the most important settings for achieving this is the Start/Shutdown order:

The startup delay prevents overloading I/O at host boot time, and the shutdown timeout covers shutting down (think UPS!) when an app hangs or blocks the shutdown…
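To see how order, startup delay and shutdown timeout interact, here is a small simulation. It assumes the semantics as I understand them from the Proxmox docs: lower `order` starts first, `up` is the wait before the *next* guest starts, shutdown runs in reverse order, and `down` is how long to wait for a clean shutdown (180 s assumed as the default). On the CLI this is the `startup` option, e.g. `qm set 100 --startup order=1,up=60`. The `Guest` class and VM IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    vmid: int
    order: int       # lower starts first; shutdown runs in reverse
    up: int = 0      # startup delay: seconds to wait before starting the next guest
    down: int = 180  # shutdown timeout in seconds (180 assumed as default here)


def start_schedule(guests: list[Guest]) -> list[tuple[int, int]]:
    """(vmid, seconds after host boot) for each guest, in start order."""
    t = 0
    plan = []
    for g in sorted(guests, key=lambda g: g.order):
        plan.append((g.vmid, t))
        t += g.up  # the next guest waits, so the disks aren't hammered all at once
    return plan


def shutdown_plan(guests: list[Guest]) -> list[tuple[int, int]]:
    """(vmid, timeout) in reverse start order, e.g. when the UPS triggers a shutdown."""
    return [(g.vmid, g.down) for g in sorted(guests, key=lambda g: g.order, reverse=True)]


if __name__ == "__main__":
    guests = [Guest(101, order=2, up=30), Guest(100, order=1, up=60), Guest(102, order=3)]
    print(start_schedule(guests))  # [(100, 0), (101, 60), (102, 90)]
    print(shutdown_plan(guests))   # [(102, 180), (101, 180), (100, 180)]
```

A typical pattern: storage or DNS guests first with a generous `up`, application guests after them, and a `down` short enough that everything fits inside the UPS runtime.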

Have a look at the options; I think you’ll get the hang of this really fast.

You’re driving a Ferrari now, Dan, and it’s time to shift to fourth gear!

See also:

https://pve.proxmox.com/wiki/High_Availability_Cluster

Note: It will work well with only one network, but a separate cluster network IS better!

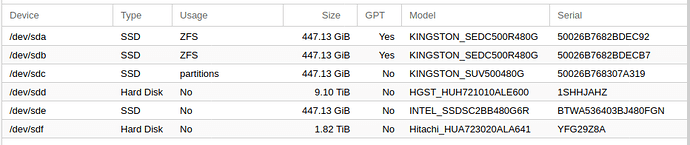

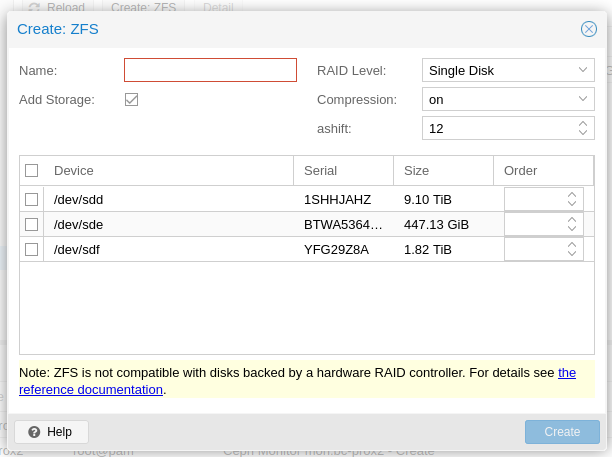

/dev/sdc is missing