Hello again. As promised, I've installed NS bare metal on a PC Engines APU2e4 machine, and the PPPoE speed issue seems to be even more noticeable here:

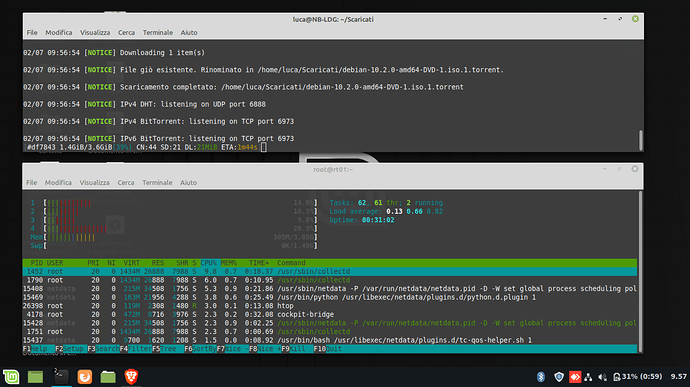

RED interface as DHCP (PPPoE handled by the FRITZ!Box router):

As you can see, I can easily reach around 22 MB/s.

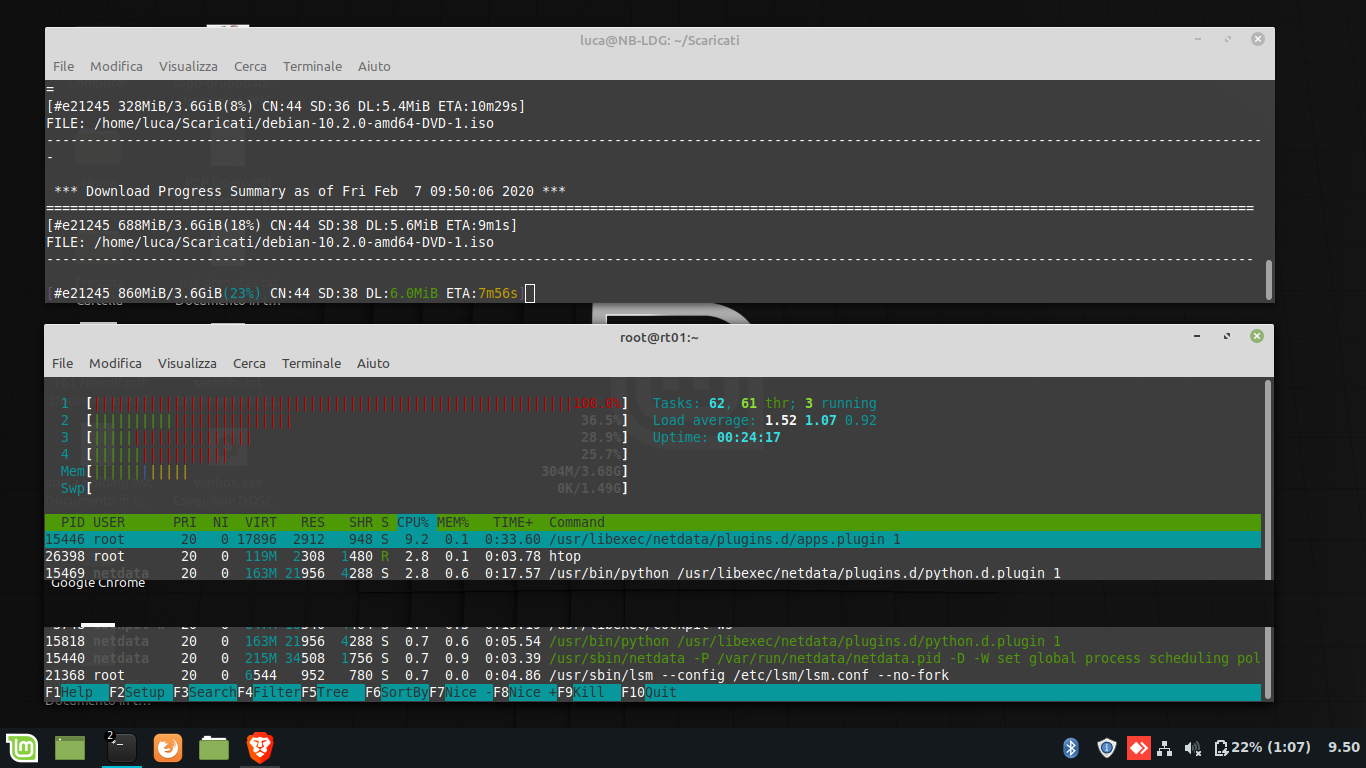

RED interface as PPPoE:

As you can see, I can barely hit 6 MB/s, and the CPU core load skyrockets.

The tests were run on a clean installation with only one client connected. Since the PPPoE process seems to eat a lot of CPU resources, I guess the speed will be even worse in a real-world scenario with many services and clients connected.

Cheers