I’m testing NS8 Beta 1 with Alma 9 on an ESXi VM, for use as a file server.

Several times a day the ACLs for the Samba shares are lost and have to be set again. Any clues?

Thanks for testing!

Did you set the ACLs in the web UI and which ones did you set? I tested the default ACLs (write, readonly, guest) and they still work so far.

Which client OS did you use when connecting to the share?

Yes, I used the cluster-admin UI.

I created the shares and set the ACLs via the Shared folders page.

Because of the errors I got during my last attempt to set the ACLs, I rebooted the server, and now none of the shares are available.

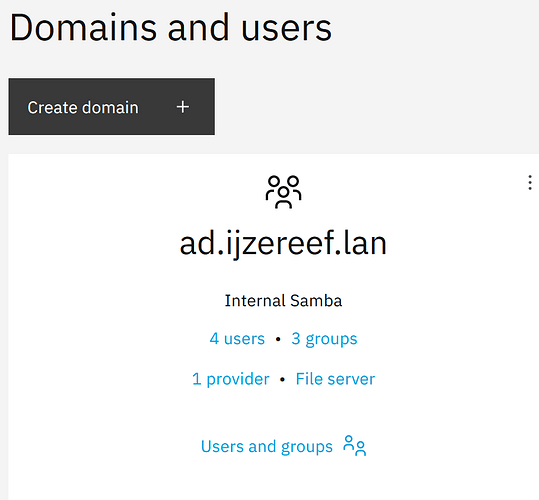

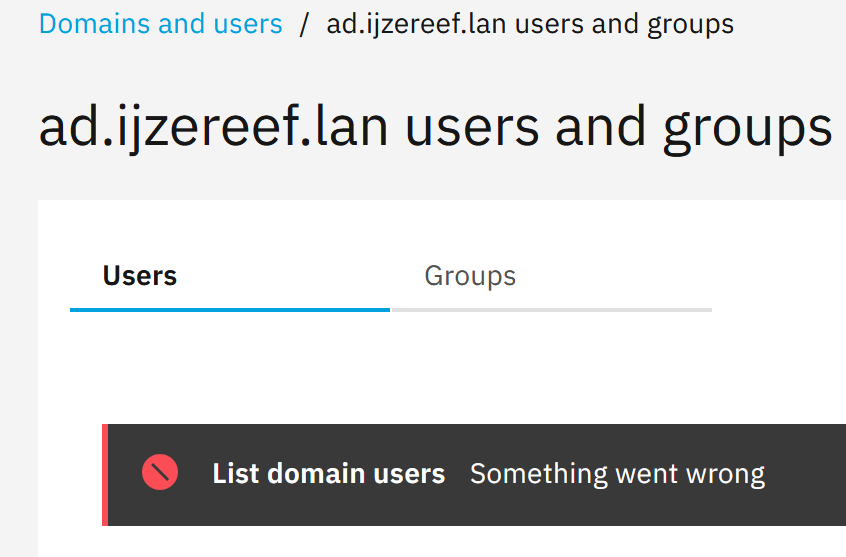

I noticed that none of my users are available anymore, and the shares are not available anymore either:

{

"context": {

"action": "list-domain-groups",

"data": {

"domain": "ad.ijzereef.lan"

},

"extra": {

"eventId": "aa8ed9e9-0165-4d82-8757-3357784bc6c2",

"isNotificationHidden": true,

"title": "List domain groups"

},

"id": "2406b111-2dae-4181-97e3-985eee5affc3",

"parent": "",

"queue": "cluster/tasks",

"timestamp": "2023-05-22T20:38:30.196062953Z",

"user": "admin"

},

"status": "aborted",

"progress": 0,

"subTasks": [],

"validated": false,

"result": {

"error": "Traceback (most recent call last):\n File \"/var/lib/nethserver/cluster/actions/list-domain-groups/50list_groups\", line 33, in <module>\n groups = Ldapclient.factory(**domain).list_groups()\n File \"/usr/local/agent/pypkg/agent/ldapclient/__init__.py\", line 29, in factory\n return LdapclientAd(**kwargs)\n File \"/usr/local/agent/pypkg/agent/ldapclient/base.py\", line 37, in __init__\n self.ldapconn = ldap3.Connection(self.ldapsrv,\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 363, in __init__\n self._do_auto_bind()\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 389, in _do_auto_bind\n self.bind(read_server_info=True)\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 607, in bind\n response = self.post_send_single_response(self.send('bindRequest', request, controls))\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/strategy/sync.py\", line 160, in post_send_single_response\n responses, result = self.get_response(message_id)\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/strategy/base.py\", line 370, in get_response\n raise LDAPSessionTerminatedByServerError(self.connection.last_error)\nldap3.core.exceptions.LDAPSessionTerminatedByServerError: session terminated by server\n",

"exit_code": 1,

"file": "task/cluster/2406b111-2dae-4181-97e3-985eee5affc3",

"output": ""

}

}

When I try to create a share again, I get an error message containing:

{

"context": {

"action": "list-domain-groups",

"data": {

"domain": "ad.ijzereef.lan"

},

"extra": {

"eventId": "a1cb463e-a304-4310-acdd-80b36b9b1ced",

"isNotificationHidden": true,

"title": "List domain groups"

},

"id": "7c2d04b9-4a57-417a-b8f6-c1dbf7d7e6cd",

"parent": "",

"queue": "cluster/tasks",

"timestamp": "2023-05-22T20:30:07.051731154Z",

"user": "admin"

},

"status": "aborted",

"progress": 0,

"subTasks": [],

"validated": false,

"result": {

"error": "Traceback (most recent call last):\n File \"/var/lib/nethserver/cluster/actions/list-domain-groups/50list_groups\", line 33, in <module>\n groups = Ldapclient.factory(**domain).list_groups()\n File \"/usr/local/agent/pypkg/agent/ldapclient/__init__.py\", line 29, in factory\n return LdapclientAd(**kwargs)\n File \"/usr/local/agent/pypkg/agent/ldapclient/base.py\", line 37, in __init__\n self.ldapconn = ldap3.Connection(self.ldapsrv,\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 363, in __init__\n self._do_auto_bind()\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 389, in _do_auto_bind\n self.bind(read_server_info=True)\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/core/connection.py\", line 607, in bind\n response = self.post_send_single_response(self.send('bindRequest', request, controls))\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/strategy/sync.py\", line 160, in post_send_single_response\n responses, result = self.get_response(message_id)\n File \"/usr/local/agent/pyenv/lib64/python3.9/site-packages/ldap3/strategy/base.py\", line 370, in get_response\n raise LDAPSessionTerminatedByServerError(self.connection.last_error)\nldap3.core.exceptions.LDAPSessionTerminatedByServerError: session terminated by server\n",

"exit_code": 1,

"file": "task/cluster/7c2d04b9-4a57-417a-b8f6-c1dbf7d7e6cd",

"output": ""

}

}
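Both task dumps above are long, but the part that matters for diagnosis is the last line of the traceback stored in `result.error`. As a minimal sketch, assuming the dump has been saved as JSON text, the following pulls out the action, the status and that final exception line. The helper name `summarize_task` is made up for illustration and is not part of NS8; it only relies on the field names visible in the output above.

```python
import json


def summarize_task(task_json: str) -> dict:
    """Return action, status and the final traceback line of a task dump."""
    task = json.loads(task_json)
    context = task.get("context") or {}
    result = task.get("result") or {}
    error_text = result.get("error") or ""
    # The last non-empty line of a Python traceback names the exception.
    error_lines = [line for line in error_text.splitlines() if line.strip()]
    return {
        "action": context.get("action"),
        "status": task.get("status"),
        "exception": error_lines[-1] if error_lines else None,
    }


if __name__ == "__main__":
    # Shortened sample with the same shape as the dumps in this thread.
    sample = json.dumps({
        "context": {"action": "list-domain-groups"},
        "status": "aborted",
        "result": {
            "error": "Traceback (most recent call last):\n"
                     "ldap3.core.exceptions.LDAPSessionTerminatedByServerError: "
                     "session terminated by server\n",
            "exit_code": 1,
        },
    })
    print(summarize_task(sample))
```

For both dumps in this thread the exception line is the same: the LDAP bind to the account provider was terminated by the server, which fits a domain controller that is not running.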

Does your VM fulfill the system requirements?

Did you install from ISO or did you use an image? There were issues regarding the images.

Yes Markuz,

The requirements are met: 3 CPUs, 1 TB storage and 4 GB RAM.

Installation was from the ISO file.

Thank you for testing, Guus!

It seems the container is not running.

Can you check with the following commands?

First, log in as the samba user: ssh samba1@localhost

Then, please copy & paste the output of the following:

systemctl --user status

podman ps -a

Hi @Guus

I assume you mean three cores allocated for the VM inside ESXi.

You are aware that ALL multi-CPU operations, including cores / sockets, run using “Symmetric Multiprocessing”? See also: Symmetric multiprocessing (Wikipedia).

And in real life there has NEVER existed any system with three CPU cores (Or three CPUs on Sockets)?

So I’m wondering why you’re using this relatively inefficient three-core concept?

The system has to make up for the non-symmetric hardware, using CPU cycles!

And for no actual benefit.

In fact, this asymmetric config can cause issues, especially in timing / IO operations…

Just as background info.

My 2 cents

Andy

I tried, but I was asked for a password for samba1, which I don’t know.

I will change the number of cores to 4 just to be sure.

Andy,

No, I didn’t realize how this works and just added a third core. I have corrected this in the meantime. Thanks for explaining.

My thinking was that an extra core would speed up the data transfer from the backup to the shares in the new structure, as I’m talking about roughly 300 GB of data.

Thanks

As the root user I get the following:

systemctl --user status

● yz4fs.ijzereef.lan

State: running

Units: 123 loaded (incl. loaded aliases)

Jobs: 0 queued

Failed: 0 units

Since: Tue 2023-05-23 15:03:01 CEST; 1min 56s ago

systemd: 252-13.el9_2

CGroup: /user.slice/user-0.slice/user@0.service

└─init.scope

├─3113 /usr/lib/systemd/systemd --user

└─3122 "(sd-pam)"

[root@yz4fs ~]# podman ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

de7b90b2c02f docker.io/grafana/promtail:2.7.3 -config.file=/etc… 2 hours ago Up 2 hours promtail1

433e6ca8d31b Package redis · GitHub redis-server /dat… 2 hours ago Up 2 hours redis

[root@yz4fs ~]#

It should not require a password, but just accept the SSH key.

Maybe in your installation the user is samba2 or samba3? You can figure it out using ls /home/
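If it helps, the `ls /home/` check can be sketched in Python as below. This is only an illustration: the helper name `find_module_users` is hypothetical, and it assumes the module user is named `samba` followed by a number, as in the examples above (samba1, samba2, samba3).

```python
import re
from pathlib import Path


def find_module_users(names, prefix="samba"):
    """Return names of the form <prefix><number>, sorted by that number."""
    pattern = re.compile(rf"{re.escape(prefix)}(\d+)")
    # fullmatch rejects names with anything after the number, e.g. "samba1x".
    matches = [(int(m.group(1)), name)
               for name in names
               if (m := pattern.fullmatch(name))]
    return [name for _, name in sorted(matches)]


if __name__ == "__main__":
    home = Path("/home")
    names = [p.name for p in home.iterdir() if p.is_dir()] if home.is_dir() else []
    for user in find_module_users(names):
        # Print the command to try for each candidate user.
        print(f"ssh {user}@localhost")
```

Each printed line is a candidate for the `ssh` step; once logged in as that user, `systemctl --user status` and `podman ps -a` report on that module rather than on root.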

Due to a big project I must postpone the testing.